To check whether PyTorch is using the GPU, first confirm that a GPU is available by calling torch.cuda.is_available(). If it returns True, PyTorch can see a CUDA-capable GPU. Next, set the device for tensor operations and confirm that your tensors actually live on it.

Checking CUDA Availability

import torch

print(f"CUDA is available: {torch.cuda.is_available()}")

# Output: CUDA is available: True

Here is the screenshot that proves that CUDA is available for PyTorch:
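If torch.cuda.is_available() returns False, one common cause is a PyTorch build without CUDA support. The sketch below is a minimal example, assuming a recent PyTorch version. It also reports the CUDA version PyTorch was compiled against through torch.version.cuda, which is None for builds without CUDA, such as CPU-only builds. The helper name describe_cuda_setup is hypothetical.

```python
import torch


def describe_cuda_setup() -> dict:
    """Collect basic facts about PyTorch's CUDA support (hypothetical helper)."""
    return {
        "torch_version": torch.__version__,
        # CUDA version PyTorch was compiled with; None for builds without CUDA
        "cuda_build_version": torch.version.cuda,
        # True only if PyTorch can actually use a CUDA device right now
        "cuda_available": torch.cuda.is_available(),
    }


for key, value in describe_cuda_setup().items():
    print(f"{key}: {value}")
```

If cuda_build_version is None, reinstalling a CUDA-enabled PyTorch build is usually the fix. If it is set but cuda_available is False, the GPU driver or hardware is the more likely culprit.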

Set and Confirm Device for Tensor Operations

The second step is to move your PyTorch tensors from the CPU to the GPU with the .to() method and then verify that they are stored on the GPU rather than on the local CPU.

import torch

device = torch.device("cuda")

tensor = torch.randn(3, 3).to(device)

print(tensor)

print("Tensor is on:", tensor.device)

Here is the output screenshot:

In the output, the tensor's device is reported as cuda:0, the first CUDA GPU. This confirms that PyTorch is using the GPU.
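Checking tensor.device shows where a tensor is stored. Operations on tensors produce their results on the same device as their inputs, so checking the device of a result also confirms that the computation ran there. This is a minimal sketch. It assumes the fallback-to-CPU device pattern so that it also runs on machines without a GPU. The helper matmul_on is hypothetical.

```python
import torch

# Use the GPU when available; otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")


def matmul_on(device: torch.device, size: int = 3) -> torch.Tensor:
    """Create two random matrices directly on `device` and multiply them there."""
    a = torch.randn(size, size, device=device)
    b = torch.randn(size, size, device=device)
    return a @ b  # the result is allocated on the same device as the inputs


result = matmul_on(device)
print("Result is on:", result.device)
print("Is CUDA tensor:", result.is_cuda)
```

Creating tensors with device=... allocates them on the target device directly and skips the intermediate CPU copy that .to() would make. Combining a CUDA tensor with a CPU tensor in one operation generally raises a RuntimeError instead of silently moving data.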

If you are working on a laptop without an NVIDIA GPU, you can use Google Colab (https://colab.research.google.com/). It is a free, browser-based notebook environment with GPU support. Select a GPU runtime under Runtime > Change runtime type, and the PyTorch code in this article will run on the GPU.

Checking the Number and Name of GPUs

For further verification, you can check how many GPUs PyTorch can see. Use torch.cuda.device_count() to get the number of available GPUs, and use torch.cuda.get_device_name(index) to get a GPU's name, where index 0 is the first GPU.

import torch

print("GPU count:", torch.cuda.device_count())

# Output: GPU count: 1

print("GPU name:", torch.cuda.get_device_name(0))

# Output: GPU name: Tesla T4

The output shows that one GPU is available and that it is a Tesla T4. The NVIDIA Tesla T4 is a data center GPU designed for deep learning inference and training.

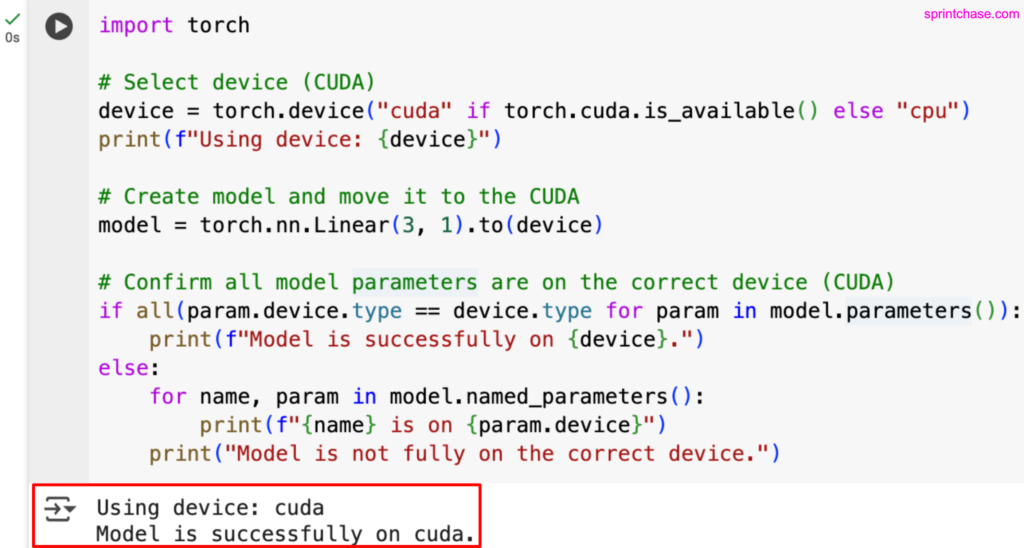
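torch.cuda.get_device_name(0) only queries the first GPU. To inspect every visible GPU, you can loop over range(torch.cuda.device_count()) and call torch.cuda.get_device_properties(), which exposes each device's name, total memory in bytes, and compute capability (major and minor). The sketch below assumes that approach. The helper list_gpus is hypothetical, and on a machine without CUDA it simply returns an empty list.

```python
import torch


def list_gpus() -> list:
    """Return name, memory, and compute capability for every visible GPU."""
    gpus = []
    for index in range(torch.cuda.device_count()):  # no iterations without CUDA
        props = torch.cuda.get_device_properties(index)
        gpus.append({
            "index": index,
            "name": props.name,
            "total_memory_gb": props.total_memory / 1e9,  # bytes -> GB
            "compute_capability": f"{props.major}.{props.minor}",
        })
    return gpus


for gpu in list_gpus():
    print(gpu)

if torch.cuda.is_available():
    # Index of the GPU that new CUDA tensors go to by default
    print("Current device index:", torch.cuda.current_device())
```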

Move Model to GPU and Confirm

So far, we have confirmed GPU usage by moving a tensor to the GPU. In this section, we create a model, move it to the GPU, and confirm that all of its parameters are on the GPU.

import torch

# Select CUDA if available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")

# Create a model and move its parameters to the selected device
model = torch.nn.Linear(3, 1).to(device)

# Confirm that all model parameters are on the selected device
if all(param.device.type == device.type for param in model.parameters()):
    print(f"Model is successfully on {device}.")
else:
    # Report where each parameter actually lives
    for name, param in model.named_parameters():
        print(f"{name} is on {param.device}")
    print("Model is not fully on the correct device.")
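Placing the parameters on the GPU covers only the model. The input tensors passed to the model must be on the same device too, otherwise the forward pass raises a RuntimeError. Below is a minimal sketch, assuming the same Linear(3, 1) model and an arbitrary batch of 4 random inputs. It reads the model's device from its first parameter with next(model.parameters()).device. That idiom assumes all parameters share one device, which the check above confirms.

```python
import torch

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = torch.nn.Linear(3, 1).to(device)

# The device of the first parameter tells you where the model lives
model_device = next(model.parameters()).device
print("Model parameters are on:", model_device)

# Inputs must be on the same device as the model's parameters
inputs = torch.randn(4, 3, device=model_device)

with torch.no_grad():  # inference only, no gradient tracking
    outputs = model(inputs)

print("Output is on:", outputs.device)
print("Output shape:", tuple(outputs.shape))
```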

Checking Active GPU Memory Usage

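To check how much memory tensors currently occupy on the current GPU, use the torch.cuda.memory_allocated() method.

To check how much memory PyTorch's caching allocator has reserved on the current GPU, use the torch.cuda.memory_reserved() method. Reserved memory includes the allocated memory plus cached blocks that are not currently in use. Both methods return values in bytes.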

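import torch

if torch.cuda.is_available():
    # Both functions return bytes; divide by 1e6 to get megabytes
    print(f"Allocated memory: {torch.cuda.memory_allocated() / 1e6:.2f} MB")
    print(f"Reserved memory: {torch.cuda.memory_reserved() / 1e6:.2f} MB")
    # Release cached memory that is not occupied by tensors back to the GPU driver
    torch.cuda.empty_cache()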
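The difference between allocated and reserved memory becomes visible when you allocate a tensor and free it again. This is a minimal sketch, assuming a float32 tensor of one million elements (4 MB of data). The allocator may round the block size up slightly, so the measured growth can be a little larger. The helper allocation_delta_bytes is hypothetical and returns None when CUDA is unavailable.

```python
import torch


def allocation_delta_bytes(num_elements: int):
    """Return how much torch.cuda.memory_allocated() grows for a float32 tensor.

    Returns None when CUDA is unavailable (hypothetical helper).
    """
    if not torch.cuda.is_available():
        return None
    before = torch.cuda.memory_allocated()
    x = torch.empty(num_elements, dtype=torch.float32, device="cuda")
    after = torch.cuda.memory_allocated()
    del x  # the freed block stays in PyTorch's cache, so it remains reserved
    return after - before


delta = allocation_delta_bytes(1_000_000)
if delta is not None:
    print(f"Allocated for 1M float32 values: {delta / 1e6:.2f} MB")
    print(f"Reserved by the caching allocator: {torch.cuda.memory_reserved() / 1e6:.2f} MB")
    print(f"Peak allocated so far: {torch.cuda.max_memory_allocated() / 1e6:.2f} MB")
else:
    print("CUDA is not available; nothing to measure.")
```

After the tensor is deleted, memory_allocated() drops back, but memory_reserved() typically stays higher until torch.cuda.empty_cache() is called.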

Together, these checks (CUDA availability, tensor and model placement, GPU count and names, and GPU memory usage) give a reliable picture of whether PyTorch is using the GPU.