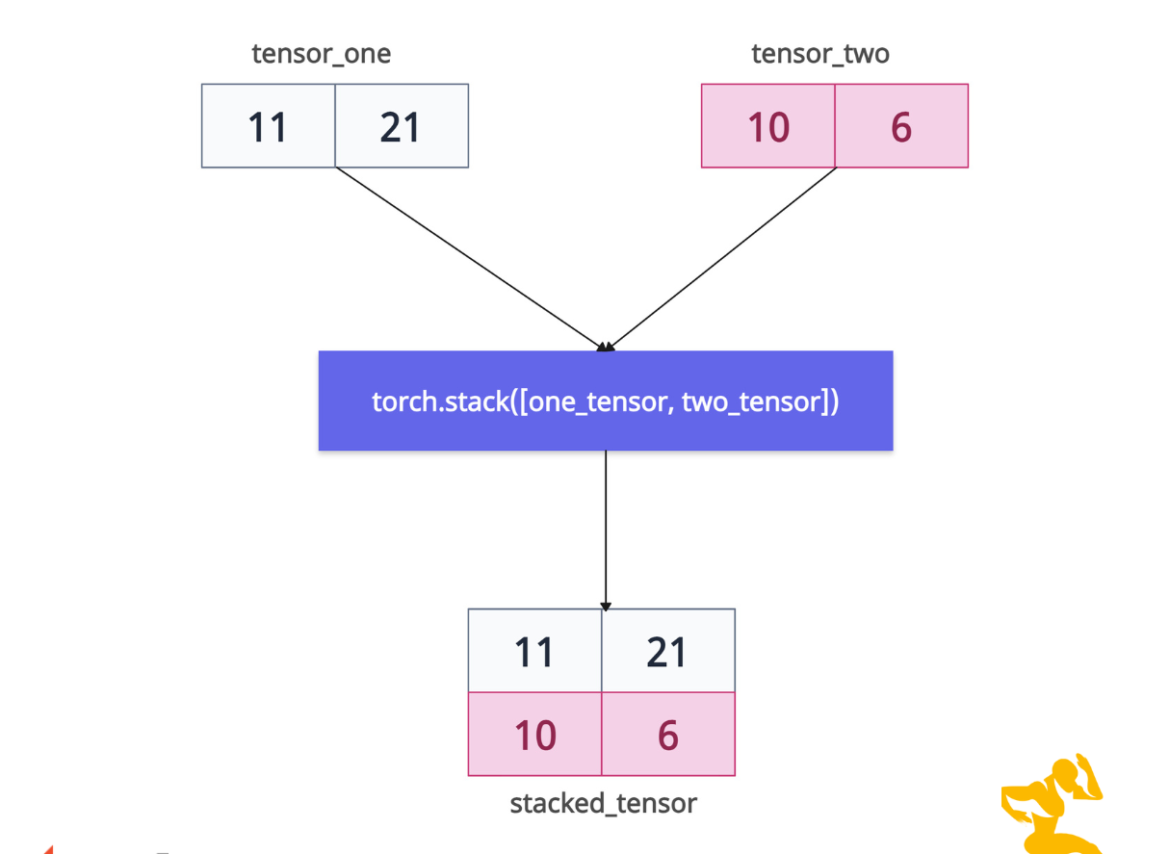

torch.stack() joins a sequence of tensors along a new dimension, combining several tensors of the same shape into a single tensor.

The figure above illustrates how stack() works. The output tensor has one more dimension than the input tensors, so you can build a 2D tensor out of 1D tensors. For example, stacking two 1D tensors (shape [3]) along dim=0 produces a 2D tensor (shape [2, 3]).

Ensure that all of your input tensors have the same shape.
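The shape rule can be checked directly. The sketch below is only an illustration: the list `tensors` and the dictionary `shapes` are made-up names, and the inputs are five zero tensors of shape (3, 4). It stacks them at each valid position and records the resulting shape.

```python
import torch

# Five hypothetical input tensors, all of shape (3, 4)
tensors = [torch.zeros(3, 4) for _ in range(5)]

shapes = {}
for dim in range(3):  # valid positions for the new dimension: 0, 1, 2
    stacked = torch.stack(tensors, dim=dim)
    shapes[dim] = tuple(stacked.shape)
    print(dim, shapes[dim])

# 0 (5, 3, 4)
# 1 (3, 5, 4)
# 2 (3, 4, 5)
```

In each case the output shape is the input shape with the number of tensors (5) inserted at position dim.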

Syntax

torch.stack(tensors, dim=0, *, out=None)

Parameters

| Name | Description |
| --- | --- |
| tensors (sequence of Tensors) | A list, tuple, or other sequence of tensors to stack. They must all have the same shape and be on the same device, and they should share a data type. |
| dim (int, optional) | The index at which the new dimension is inserted in the output. It must lie between 0 and the number of dimensions of the input tensors (inclusive); negative values count from the end. The default is 0, which adds a new leading dimension (for 1D inputs, each tensor becomes a row). With dim=1 and 1D inputs, each tensor becomes a column. |
| out (Tensor, optional) | A pre-allocated tensor that receives the result. It is keyword-only (note the * in the signature) and is useful when you want to reuse an existing buffer instead of allocating a new tensor. |
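As a small sketch of how the tensors and dim parameters behave, the example below uses two hypothetical 1D tensors, `a` and `b`. It shows that a list and a tuple are both accepted, and that a dim beyond the allowed range is rejected. Current PyTorch releases report this as an IndexError; the code also catches RuntimeError in case a given version differs.

```python
import torch

a = torch.tensor([1, 2, 3])
b = torch.tensor([4, 5, 6])

# Any sequence of tensors works: a list and a tuple give the same result
from_list = torch.stack([a, b])
from_tuple = torch.stack((a, b))
print(torch.equal(from_list, from_tuple))  # True

# For 1D inputs the new dimension can go at index 0 or 1; index 2 is out of range
dim_error = None
try:
    torch.stack([a, b], dim=2)
except (IndexError, RuntimeError) as exc:
    dim_error = exc
    print(type(exc).__name__, exc)
```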

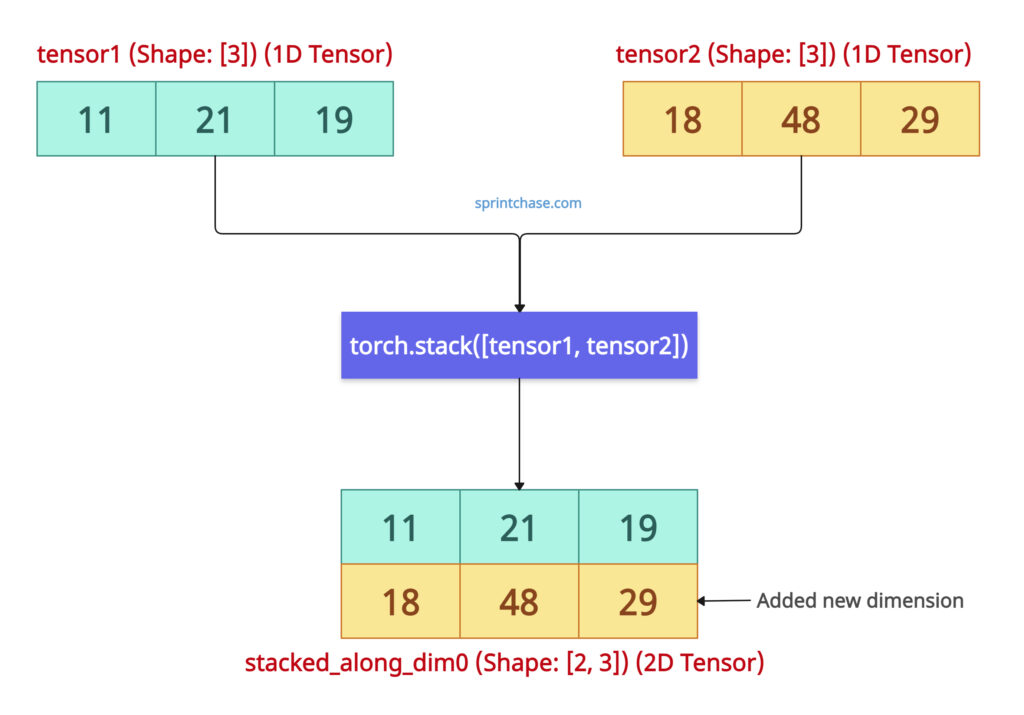

Stack along the default dimension

Let’s stack two 1D tensors into one 2D tensor, along the default dim = 0.

    import torch

    tensor1 = torch.tensor([11, 21, 19])
    print(tensor1.shape)
    # Output: torch.Size([3])

    tensor2 = torch.tensor([18, 48, 29])
    print(tensor2.shape)
    # Output: torch.Size([3])

    stacked_along_dim0 = torch.stack([tensor1, tensor2])
    print(stacked_along_dim0)
    # Output: tensor([[11, 21, 19],
    #                 [18, 48, 29]])

    print(stacked_along_dim0.shape)
    # Output: torch.Size([2, 3])

Comparing the shapes shows the change from 1D to 2D: each input has shape [3], and the stacked result has shape [2, 3].

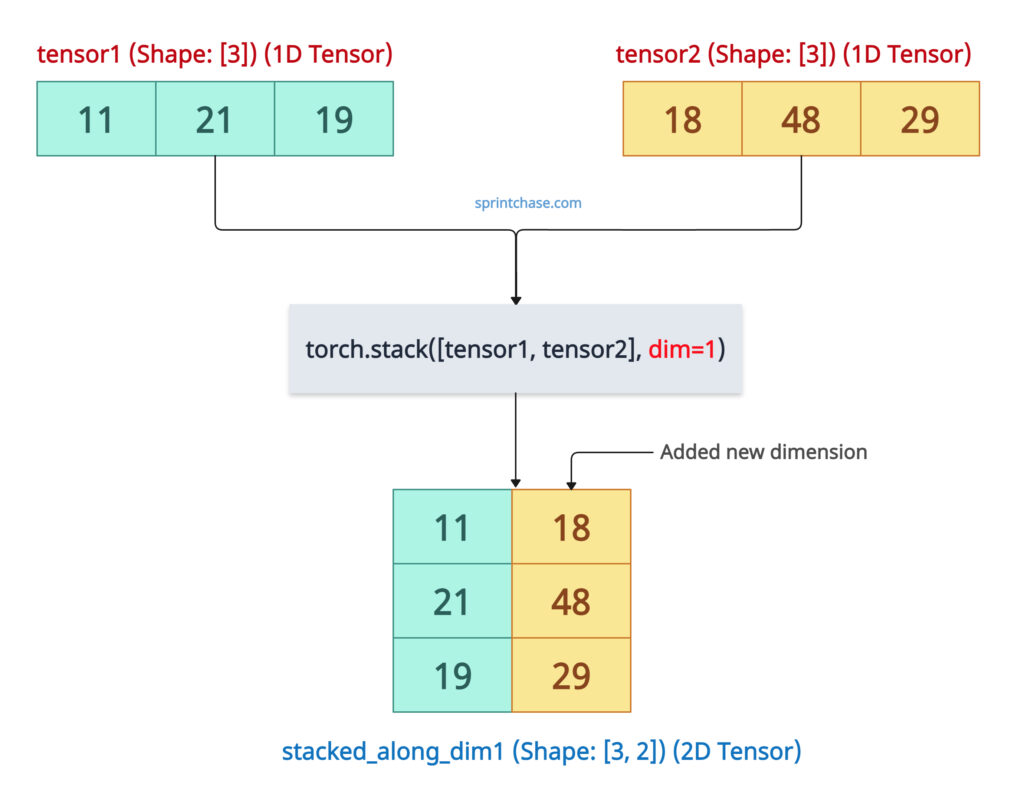

Stack along a different dimension

Let’s stack two tensors along dim=1. This inserts the new dimension at index 1, so each input tensor becomes a column of the result.

    import torch

    tensor1 = torch.tensor([11, 21, 19])
    print(tensor1.shape)
    # Output: torch.Size([3])

    tensor2 = torch.tensor([18, 48, 29])
    print(tensor2.shape)
    # Output: torch.Size([3])

    stacked_along_dim1 = torch.stack([tensor1, tensor2], dim=1)
    print(stacked_along_dim1)
    # Output: tensor([[11, 18],
    #                 [21, 48],
    #                 [19, 29]])

    print(stacked_along_dim1.shape)
    # Output: torch.Size([3, 2])

The above output shows that each input tensor has become a column of the result, which has three rows and two columns. Each row pairs the corresponding elements of the original tensors.
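To make the row-versus-column relationship concrete, here is a short sketch that reuses the tensors from the example. The names `rows` and `cols` are introduced only for illustration. For 1D inputs, stacking along dim=1 gives the transpose of stacking along dim=0.

```python
import torch

tensor1 = torch.tensor([11, 21, 19])
tensor2 = torch.tensor([18, 48, 29])

rows = torch.stack([tensor1, tensor2], dim=0)  # shape [2, 3]: inputs are rows
cols = torch.stack([tensor1, tensor2], dim=1)  # shape [3, 2]: inputs are columns

# Stacking along dim=1 gives the transpose of stacking along dim=0
print(torch.equal(cols, rows.T))         # True

# Each input can be read back as a column of the dim=1 result
print(torch.equal(cols[:, 0], tensor1))  # True
print(torch.equal(cols[:, 1], tensor2))  # True
```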

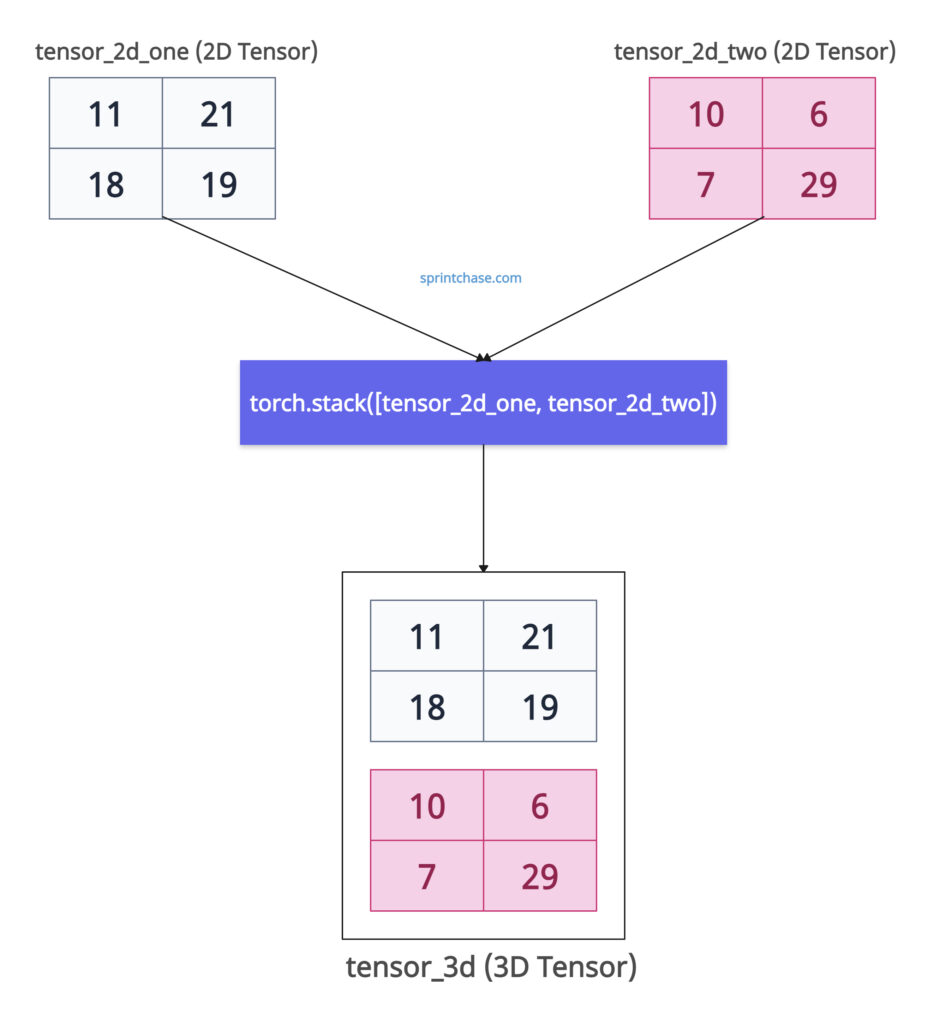

Stacking 2D tensors

Let’s stack two 2D tensors at dim = 0 and create a 3D tensor out of them.

    import torch

    tensor_2d_one = torch.tensor([[11, 21], [18, 19]])
    print(tensor_2d_one.shape)
    # Output: torch.Size([2, 2])

    tensor_2d_two = torch.tensor([[10, 6], [7, 29]])
    print(tensor_2d_two.shape)
    # Output: torch.Size([2, 2])

    tensor_3d = torch.stack([tensor_2d_one, tensor_2d_two])
    print(tensor_3d)
    # Output: tensor([[[11, 21],
    #                  [18, 19]],
    #
    #                 [[10,  6],
    #                  [ 7, 29]]])

    print(tensor_3d.shape)
    # Output: torch.Size([2, 2, 2])

You can see that the output tensor’s shape is now [2, 2, 2]: a 3D tensor created from two 2D tensors.
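A common use of this pattern is collecting equally shaped samples into one batch. The sketch below is an assumption-laden illustration: `samples`, `batch`, and `recovered` are hypothetical names, and the 2x2 tensors stand in for real data. It also shows that indexing the new dimension, or calling torch.unbind(), gives the original inputs back.

```python
import torch

# Three hypothetical 2x2 "samples", filled with 0.0, 1.0 and 2.0
samples = [torch.full((2, 2), float(i)) for i in range(3)]

batch = torch.stack(samples)      # new leading dimension of size 3
print(batch.shape)                # torch.Size([3, 2, 2])

# Indexing the new dimension returns the original inputs
print(torch.equal(batch[1], samples[1]))  # True

# torch.unbind splits the batch along the same dimension again
recovered = torch.unbind(batch, dim=0)
print(all(torch.equal(r, s) for r, s in zip(recovered, samples)))  # True
```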

Stacking with dim=-1

With torch.stack(), dim=-1 refers to the last dimension of the output tensor, not of the inputs. If your input tensors are 2D, the output is 3D, so dim=-1 is the same as dim=2. In other words, we are stacking along a new last dimension.

    import torch

    tensor_2d_one = torch.tensor([[11, 21], [18, 19]])
    print(tensor_2d_one.shape)
    # Output: torch.Size([2, 2])

    tensor_2d_two = torch.tensor([[10, 6], [7, 29]])
    print(tensor_2d_two.shape)
    # Output: torch.Size([2, 2])

    tensor_minus_one = torch.stack([tensor_2d_one, tensor_2d_two], dim=-1)
    print(tensor_minus_one)
    # Output: tensor([[[11, 10],
    #                  [21,  6]],
    #
    #                 [[18,  7],
    #                  [19, 29]]])

    print(tensor_minus_one.shape)
    # Output: torch.Size([2, 2, 2])

The above output shows that we have added the third dimension at the end of the combined tensor.
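As a quick check of what dim=-1 means here, the following sketch compares it with the explicit index dim=2 for the same 2D inputs. The variable names `last` and `explicit` are introduced only for this illustration.

```python
import torch

tensor_2d_one = torch.tensor([[11, 21], [18, 19]])
tensor_2d_two = torch.tensor([[10, 6], [7, 29]])

last = torch.stack([tensor_2d_one, tensor_2d_two], dim=-1)
explicit = torch.stack([tensor_2d_one, tensor_2d_two], dim=2)

# For 2D inputs, dim=-1 and dim=2 name the same position in the 3D output
print(torch.equal(last, explicit))  # True

# Indexing the last dimension gives back the original inputs
print(torch.equal(last[..., 0], tensor_2d_one))  # True
print(torch.equal(last[..., 1], tensor_2d_two))  # True
```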

Stacking mismatched shape tensors

If the input tensors have mismatched shapes, torch.stack() raises RuntimeError: stack expects each tensor to be equal size.

    import torch

    tensor_1d = torch.tensor([11, 21])
    print(tensor_1d.shape)
    # Output: torch.Size([2])

    tensor_2d = torch.tensor([[10, 6], [7, 29]])
    print(tensor_2d.shape)
    # Output: torch.Size([2, 2])

    # This call fails because the shapes differ
    mismatched_tensor = torch.stack([tensor_1d, tensor_2d])
    # Output: RuntimeError: stack expects each tensor to be equal size,
    # but got [2] at entry 0 and [2, 2] at entry 1

To avoid this type of RuntimeError, verify all input tensors have identical shapes before stacking.
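One possible way to do that verification is sketched below. `stack_checked` is a hypothetical helper written for this article, not part of PyTorch; it compares the shapes first and raises a ValueError with all offending shapes listed before torch.stack() is ever called.

```python
import torch

def stack_checked(tensors, dim=0):
    """Hypothetical helper: check that all shapes match, then call torch.stack."""
    shapes = {tuple(t.shape) for t in tensors}
    if len(shapes) != 1:
        raise ValueError(f"cannot stack tensors with different shapes: {sorted(shapes)}")
    return torch.stack(tensors, dim=dim)

tensor_1d = torch.tensor([11, 21])
tensor_2d = torch.tensor([[10, 6], [7, 29]])

# Mismatched shapes are reported by the helper itself
message = None
try:
    stack_checked([tensor_1d, tensor_2d])
except ValueError as exc:
    message = str(exc)
    print(message)

# Matching shapes are stacked as usual
ok = stack_checked([tensor_1d, tensor_1d])
print(ok.shape)  # torch.Size([2, 2])
```

Whether an explicit check is worth it depends on the situation; the built-in RuntimeError is often informative enough on its own.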

Passing the “out” argument

If you specify the “out” argument, the stacked result is written into that tensor instead of a newly allocated one.

You can pre-allocate the “out” tensor with torch.empty() and then write the stacked result into it.

    import torch

    tensor1 = torch.tensor([19, 21])
    tensor2 = torch.tensor([11, 18])

    out = torch.empty((2, 2), dtype=torch.int)
    torch.stack([tensor1, tensor2], out=out)
    print(out)
    # Output:
    # tensor([[19, 21],
    #         [11, 18]], dtype=torch.int32)

    print(out.shape)
    # Output: torch.Size([2, 2])

In this case, you need to know the shape of the output tensor in advance so that you can pre-allocate a tensor of exactly that shape.
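For stacking along dim=0, the output shape can be derived from the inputs rather than hard-coded. The sketch below assumes a hypothetical list `tensors` of equally shaped inputs and builds the buffer with the same dtype and device as the first input, which is a choice made for this example rather than a requirement stated above.

```python
import torch

tensors = [torch.tensor([19, 21]), torch.tensor([11, 18]), torch.tensor([5, 7])]

# Output shape for dim=0: number of tensors, followed by the input shape
out_shape = (len(tensors), *tensors[0].shape)

# Match the dtype and device of the inputs
out = torch.empty(out_shape, dtype=tensors[0].dtype, device=tensors[0].device)

result = torch.stack(tensors, dim=0, out=out)
print(out.shape)                            # torch.Size([3, 2])
print(result.data_ptr() == out.data_ptr())  # True: the result lives in `out`
```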

torch.cat() vs torch.stack()

torch.cat() combines tensors along an existing dimension, while torch.stack() combines them along a new dimension.

Conceptually, torch.stack() is equivalent to adding a new size-1 dimension to each input and then concatenating along it, so it does slightly more work than torch.cat(). In practice, the difference in speed is usually small.

Here is a code example that demonstrates the difference:

    import torch

    tensor1 = torch.tensor([19, 21])
    tensor2 = torch.tensor([11, 18])

    cat_result = torch.cat([tensor1, tensor2], dim=0)
    print(cat_result)
    # Output: tensor([19, 21, 11, 18])

    print(cat_result.shape)
    # Output: torch.Size([4])

    stack_result = torch.stack([tensor1, tensor2], dim=0)
    print(stack_result)
    # Output: tensor([[19, 21],
    #                 [11, 18]])

    print(stack_result.shape)
    # Output: torch.Size([2, 2])

In the case of cat_result, no new dimension is added. The elements of the second tensor are appended along the existing dimension, so the output is still a 1D tensor, now with four elements.

In the case of stack_result, a new dimension is added, so the output is a 2D tensor of shape [2, 2] in which each input forms one row.
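One way to see how the two functions relate: stacking gives the same result as giving each input a new size-1 dimension with unsqueeze() and then concatenating along it. The sketch below checks this for the tensors from the example; `stacked` and `via_cat` are names chosen only for this illustration.

```python
import torch

tensor1 = torch.tensor([19, 21])
tensor2 = torch.tensor([11, 18])

stacked = torch.stack([tensor1, tensor2], dim=0)

# Give each input a new leading dimension of size 1, then concatenate along it
via_cat = torch.cat([tensor1.unsqueeze(0), tensor2.unsqueeze(0)], dim=0)

print(torch.equal(stacked, via_cat))  # True
print(via_cat.shape)                  # torch.Size([2, 2])
```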