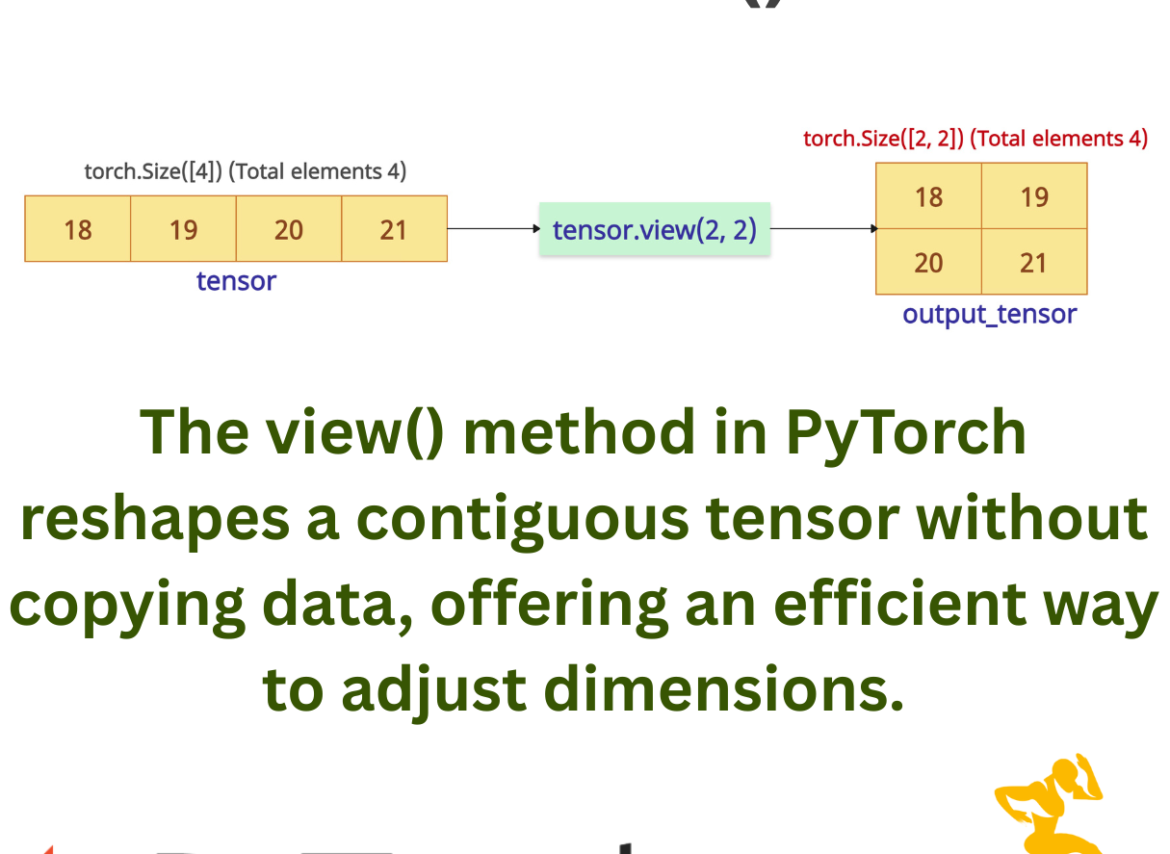

The view() method in PyTorch reshapes a contiguous tensor without copying data, which makes it an efficient way to adjust dimensions. It returns a new tensor that shares the same underlying data as the input, but has a different shape.

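The new shape must have the same number of elements as the original, and the tensor's memory layout (its strides) must be compatible with that shape. A contiguous tensor always satisfies this.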

In the figure above, only the view changes from 1D to 2D; the data stays the same. Because the result is just a view, it shares memory with the original tensor. This is the magic of view().
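To check the memory-sharing claim yourself, the short sketch below compares the storage addresses of both tensors with `data_ptr()` (the address of the first element) and then writes through the view. The variable names are only illustrative.

```python
import torch

tensor = torch.arange(4)
viewed_tensor = tensor.view(2, 2)

# Both tensors start at the same address in memory
same_memory = tensor.data_ptr() == viewed_tensor.data_ptr()
print(same_memory)  # True

# Writing through the view is visible in the original tensor
viewed_tensor[0, 0] = 100
print(tensor.tolist())  # [100, 1, 2, 3]
```

No data was copied, so a change made through either tensor shows up in the other.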

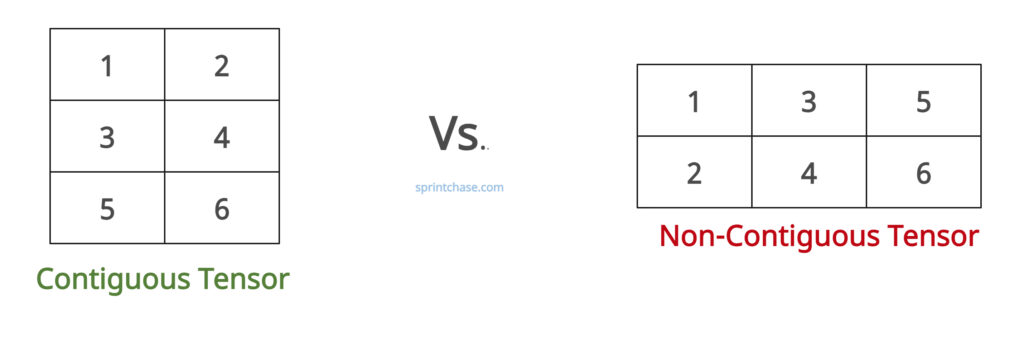

If you are working with a non-contiguous tensor, you need to make it contiguous using the .contiguous() method and then use the .view() method.

The above figure shows the difference between a contiguous and a non-contiguous tensor.
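One way to see the difference in code is to look at the strides: the number of elements to skip in memory to move one step along each dimension. This is a minimal sketch using a transpose, which is one common way to get a non-contiguous tensor.

```python
import torch

contiguous = torch.arange(12).view(3, 4)
transposed = contiguous.t()  # swaps dimensions and strides, no copy

# Row-major layout: move 4 elements per row, 1 element per column
print(contiguous.stride(), contiguous.is_contiguous())  # (4, 1) True
# Same memory, but now the first dimension moves 1 element at a time
print(transposed.stride(), transposed.is_contiguous())  # (1, 4) False
```

The transposed tensor reads the same memory in a different order, which is why its elements are no longer laid out row by row.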


To let PyTorch infer a dimension for you, pass -1 for it (e.g., tensor.view(-1, 256)). Only one dimension can be -1.
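As a quick sketch, assuming an example tensor of shape 4×512 (2048 elements), PyTorch computes the missing dimension as 2048 / 256 = 8:

```python
import torch

tensor = torch.zeros(4, 512)  # 2048 elements

# -1 tells view() to compute this dimension from the element count
reshaped = tensor.view(-1, 256)
print(reshaped.shape)  # torch.Size([8, 256])
```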

Function Signature

Tensor.view(*shape) → Tensor

Arguments

| Argument | Description |
| --- | --- |
| shape (torch.Size or int...) | The desired shape of the output tensor. |
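Per the table, `shape` can be passed as separate ints or as a `torch.Size`. In practice a plain tuple works as well; the sketch below shows that all three forms produce the same result.

```python
import torch

tensor = torch.arange(6)

# Separate ints, a tuple, and a torch.Size all describe the same shape
a = tensor.view(2, 3)
b = tensor.view((2, 3))
c = tensor.view(torch.Size([2, 3]))
print(a.shape, b.shape, c.shape)  # torch.Size([2, 3]) torch.Size([2, 3]) torch.Size([2, 3])
```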

Quick reshape

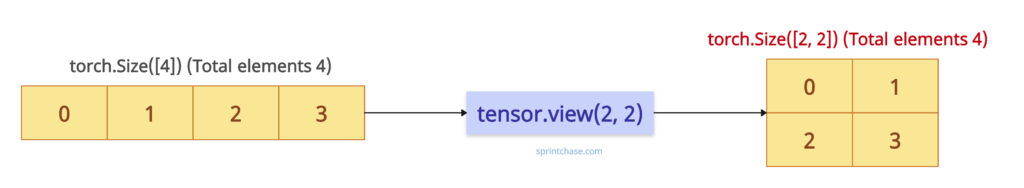

Let’s define a 1D tensor and view it as 2D without copying it.

```python
import torch

tensor = torch.arange(4)
print(tensor.shape)
# Output: torch.Size([4])

# Reshape 1D to 2D
viewed_tensor = tensor.view(2, 2)
print(viewed_tensor.shape)
# Output: torch.Size([2, 2])
```

From the output, you can see that the .view(2, 2) method returns a view of the original tensor with the same data, but reinterpreted in a 2×2 layout.

The total number of elements must match: 2×2 = 4 (same as before).
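If the element counts do not match, view() raises a RuntimeError instead of padding or truncating. A small sketch of what happens with a 3×2 shape on the same 4-element tensor:

```python
import torch

tensor = torch.arange(4)
message = None

try:
    tensor.view(3, 2)  # 3 × 2 = 6 elements, but the tensor has only 4
except RuntimeError as err:
    message = str(err)

print(message)  # shape '[3, 2]' is invalid for input of size 4
```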

The data in the original tensor and the viewed tensor look like this:

```python
import torch

tensor = torch.arange(4)
print(tensor)
# Output: tensor([0, 1, 2, 3])

# Reshape 1D to 2D
viewed_tensor = tensor.view(2, 2)
print(viewed_tensor)
# Output: tensor([[0, 1],
#                 [2, 3]])
```

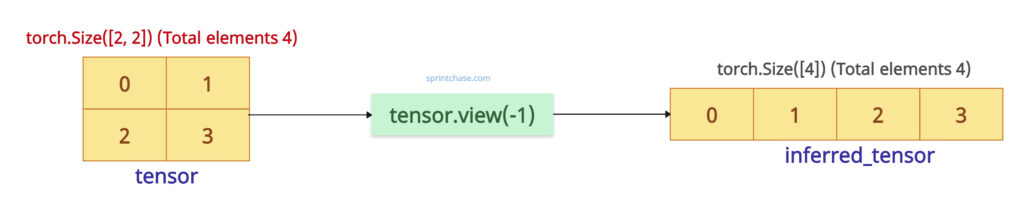

Inferring Dimensions with -1

Use view(-1) when you need to collapse all the dimensions into a single one.

In the previous section, we converted a 1D tensor to a 2D tensor. Using view(-1), we can convert a 2D tensor back to a 1D tensor without changing or copying the data.

```python
import torch

tensor = torch.randn(2, 2)
print(tensor.shape)
# Output: torch.Size([2, 2])

inferred_tensor = tensor.view(-1)
print(inferred_tensor.shape)
# Output: torch.Size([4])
```

The total number of elements must match: 4 = 2 × 2 (same as before).
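The -1 placeholder can also be combined with fixed dimensions, but only one dimension can be inferred at a time. A minimal sketch with an example 2×3×4 tensor (24 elements):

```python
import torch

tensor = torch.randn(2, 3, 4)  # 24 elements

print(tensor.view(-1).shape)     # torch.Size([24])
print(tensor.view(2, -1).shape)  # torch.Size([2, 12])

# Two -1s are ambiguous, so view() refuses them
error_raised = False
try:
    tensor.view(-1, -1)
except RuntimeError as err:
    error_raised = True
    print(err)
```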

Flattening a Multi‑Dimensional Tensor

Let’s flatten [batch, C, H, W] → [batch, C*H*W].

```python
import torch

batch = torch.randn(8, 3, 32, 32)
print(batch.shape)
# Output: torch.Size([8, 3, 32, 32])

# Keep the batch dimension and merge C, H, W: 3 * 32 * 32 = 3072
flatten = batch.view(batch.size(0), -1)
print(flatten.shape)
# Output: torch.Size([8, 3072])
```
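Using batch.size(0) rather than a hard-coded 8 keeps the flattening correct when a batch is smaller, for example the last batch of an epoch. For comparison, `torch.flatten` with `start_dim=1` expresses the same operation; this sketch checks that both give identical values on the same example batch.

```python
import torch

batch = torch.randn(8, 3, 32, 32)

flat_view = batch.view(batch.size(0), -1)
# torch.flatten keeps dimension 0 (the batch) and merges the rest
flat_fn = torch.flatten(batch, start_dim=1)

print(flat_fn.shape)                    # torch.Size([8, 3072])
print(torch.equal(flat_view, flat_fn))  # True
```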

Adding or Removing Dimensions

You can use .unsqueeze() or .view() to add or remove dimensions from the input tensor.

```python
import torch

tensor_1d = torch.tensor([1, 2, 3])
print(tensor_1d.shape)
# Output: torch.Size([3])

viewed_tensor = tensor_1d.view(1, 1, 3)
print(viewed_tensor.shape)
# Output: torch.Size([1, 1, 3])

# OR
unsqueezed_tensor = tensor_1d.unsqueeze(0).unsqueeze(0)
print(unsqueezed_tensor.shape)
# Output: torch.Size([1, 1, 3])
```
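The example above only adds dimensions. Removing them works the same way in reverse: view() to the smaller shape, or `.squeeze()`, the counterpart of `.unsqueeze()`, which drops every size-1 dimension. A short sketch:

```python
import torch

viewed_tensor = torch.tensor([1, 2, 3]).view(1, 1, 3)

# Remove the size-1 dimensions again
removed_with_view = viewed_tensor.view(3)
removed_with_squeeze = viewed_tensor.squeeze()  # drops all size-1 dimensions

print(removed_with_view.shape, removed_with_squeeze.shape)  # torch.Size([3]) torch.Size([3])
```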

Handling non-contiguous tensors

To check whether a tensor is contiguous, use the .is_contiguous() method. It returns True if the tensor is contiguous and False otherwise.
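A few common operations and how they affect contiguity, as a quick sketch on an example 3×4 tensor:

```python
import torch

x = torch.arange(12).view(3, 4)

print(x.is_contiguous())                   # True
print(x.t().is_contiguous())               # False: transpose swaps the strides
print(x[:, ::2].is_contiguous())           # False: step slicing skips elements
print(x[1].is_contiguous())                # True: a single row is one contiguous block
print(x.t().contiguous().is_contiguous())  # True: .contiguous() copies into a new layout
```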

Calling .view() on a non-contiguous tensor fails whenever the new shape cannot be expressed with the tensor's existing strides. For a deeper understanding, let’s try it and see what happens:

```python
import torch

# Transposing swaps the strides, so the result is no longer contiguous
non_contiguous_tensor = torch.randn(3, 4).t()
print(non_contiguous_tensor.is_contiguous())
# Output: False

viewed_tensor = non_contiguous_tensor.view(12)
# Output: RuntimeError: view size is not compatible with input tensor's size and stride
# (at least one dimension spans across two contiguous subspaces). Use .reshape(...) instead.
```

The output shows a RuntimeError: the requested shape is not compatible with the tensor's size and stride, because the transposed tensor's elements are not laid out in memory in the order the new shape needs. To fix this error, first convert the tensor to a contiguous one with the .contiguous() method, which copies the data into a new contiguous block of memory, and then call .view() on the result.

```python
import torch

non_contiguous_tensor = torch.randn(3, 4).t()

# Make the tensor contiguous first, then view it
viewed_tensor = non_contiguous_tensor.contiguous().view(12)
print(viewed_tensor.shape)
# Output: torch.Size([12])
```

That’s all!
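As the error message itself suggests, `.reshape()` is an alternative: it returns a view when the strides allow it and copies otherwise. The sketch below also shows the trade-off of the `.contiguous().view()` fix: because `.contiguous()` had to copy, the result no longer shares memory with the original tensor.

```python
import torch

non_contiguous_tensor = torch.randn(3, 4).t()

# reshape() copies here because the transposed strides cannot be viewed as 1D
reshaped = non_contiguous_tensor.reshape(12)
print(reshaped.shape)  # torch.Size([12])

# .contiguous() allocates new memory, so the flattened result is a copy
flattened = non_contiguous_tensor.contiguous().view(12)
print(flattened.data_ptr() == non_contiguous_tensor.data_ptr())  # False
print(torch.equal(reshaped, flattened))                          # True
```

If code later relies on writes propagating back to the original tensor, this distinction between a view and a copy matters.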