The torch.abs() function in PyTorch computes the absolute value of each element of an input tensor. The input tensor can hold real numbers (integers or floating-point values) or complex numbers.

- For a real tensor, every element becomes non-negative: negative values are negated, and non-negative values stay the same.

- For a complex tensor, it computes each element's magnitude (modulus) and returns a real-valued tensor.
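The sketch below shows how the output dtype follows from the input dtype in each case. The example tensors are arbitrary values chosen only for illustration.

```python
import torch

# Arbitrary example tensors with three different dtypes
int_t = torch.tensor([-3, 0, 4])                           # int64
float_t = torch.tensor([-1.5, 2.0])                        # float32
complex_t = torch.tensor([3 + 4j], dtype=torch.complex64)  # complex64

for t in (int_t, float_t, complex_t):
    result = torch.abs(t)
    # Real inputs keep their dtype; complex inputs produce the matching real dtype
    print(t.dtype, "->", result.dtype, result)
```

For the complex element 3 + 4j, the result is 5.0 because sqrt(3² + 4²) = 5.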

This function is useful in loss calculations, distance metrics, and data preprocessing, and in general wherever only the magnitude of a value matters. It works on tensors stored on either the CPU or a GPU.
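The sketch below illustrates the distance-metric use case by computing the L1 (Manhattan) distance between two vectors as the sum of their absolute differences. The vectors `a` and `b` are made-up values, and `torch.cdist` with `p=1` appears only as a cross-check.

```python
import torch

# Hypothetical example vectors
a = torch.tensor([1.0, -2.0, 3.0])
b = torch.tensor([4.0, 0.0, -1.0])

# L1 (Manhattan) distance: sum of element-wise absolute differences
manhattan = torch.abs(a - b).sum()
print(manhattan)  # tensor(9.)

# Cross-check: torch.cdist expects 2D inputs of shape (rows, features)
reference = torch.cdist(a.unsqueeze(0), b.unsqueeze(0), p=1)
print(reference)
```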

Syntax

torch.abs(input, *, out=None) → Tensor
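PyTorch also exposes the same operation as the tensor method `Tensor.abs()` and under the alias `torch.absolute()`. The sketch below uses an arbitrary example tensor to check that all three forms agree. It also shows that `out` must be passed by keyword, because the `*` in the signature makes it keyword-only.

```python
import torch

x = torch.tensor([-1.0, 2.5, -3.0])  # arbitrary example values

a = torch.abs(x)        # function form
b = x.abs()             # tensor method form
c = torch.absolute(x)   # alias of torch.abs

buf = torch.empty(3)    # float32 buffer with the same shape as x
torch.abs(x, out=buf)   # out is keyword-only; torch.abs(x, buf) raises TypeError

print(torch.equal(a, b), torch.equal(a, c), torch.equal(a, buf))
```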

Parameters

| Argument | Description |
| --- | --- |
| input (Tensor) | The input tensor whose absolute values are computed. It can have any shape (a scalar, a vector, or a multidimensional tensor) and an integer, floating-point, or complex dtype. |
| out (Tensor, optional) | A pre-defined output tensor in which to store the result. It is keyword-only, so it must be passed as out=... |

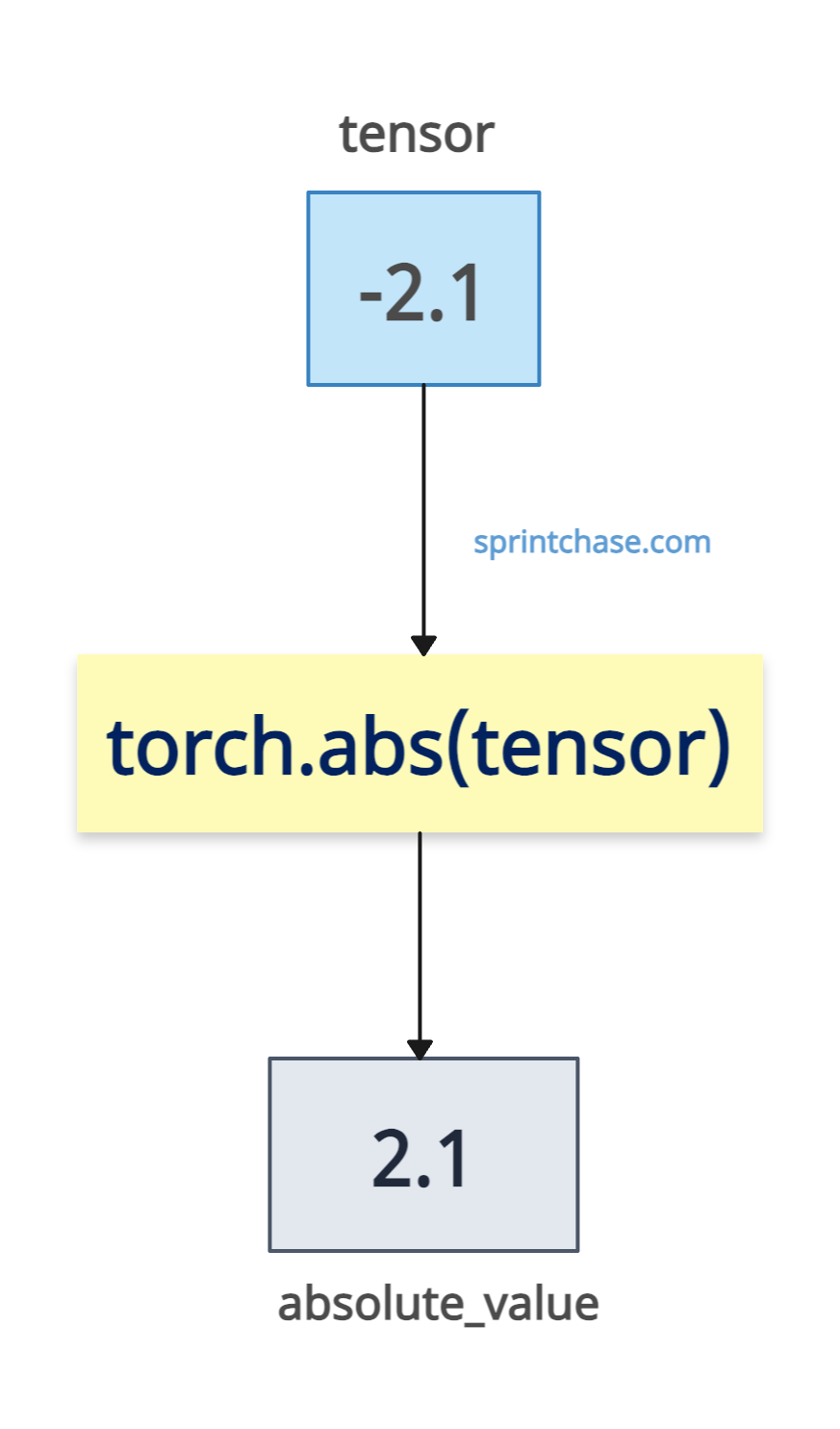

Calculating the absolute value of a Scalar Tensor

A scalar tensor differs from a 1D tensor. A scalar tensor has no dimensions (rank 0) and holds a single value. A 1D tensor has rank 1: it is a vector with one dimension and can hold multiple values.

```python
import torch

# Defining a scalar tensor
tensor = torch.tensor(-2.1)
absolute_value = torch.abs(tensor)
print(absolute_value)
# Output: tensor(2.1000)
```
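To make the scalar-versus-1D distinction concrete, the sketch below compares the rank and shape of a rank-0 tensor with a one-element vector, and checks that torch.abs() keeps each shape unchanged. The value -2.1 is reused from the example above.

```python
import torch

scalar = torch.tensor(-2.1)    # rank-0 tensor
vector = torch.tensor([-2.1])  # rank-1 tensor with one element

print(scalar.dim(), scalar.shape)  # 0 torch.Size([])
print(vector.dim(), vector.shape)  # 1 torch.Size([1])

# torch.abs keeps the rank and shape of its input
print(torch.abs(scalar).shape, torch.abs(vector).shape)

# .item() extracts a Python float from a single-element tensor
print(torch.abs(scalar).item())
```

Because the tensor stores float32, `.item()` returns a value very close to, but not exactly, 2.1.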

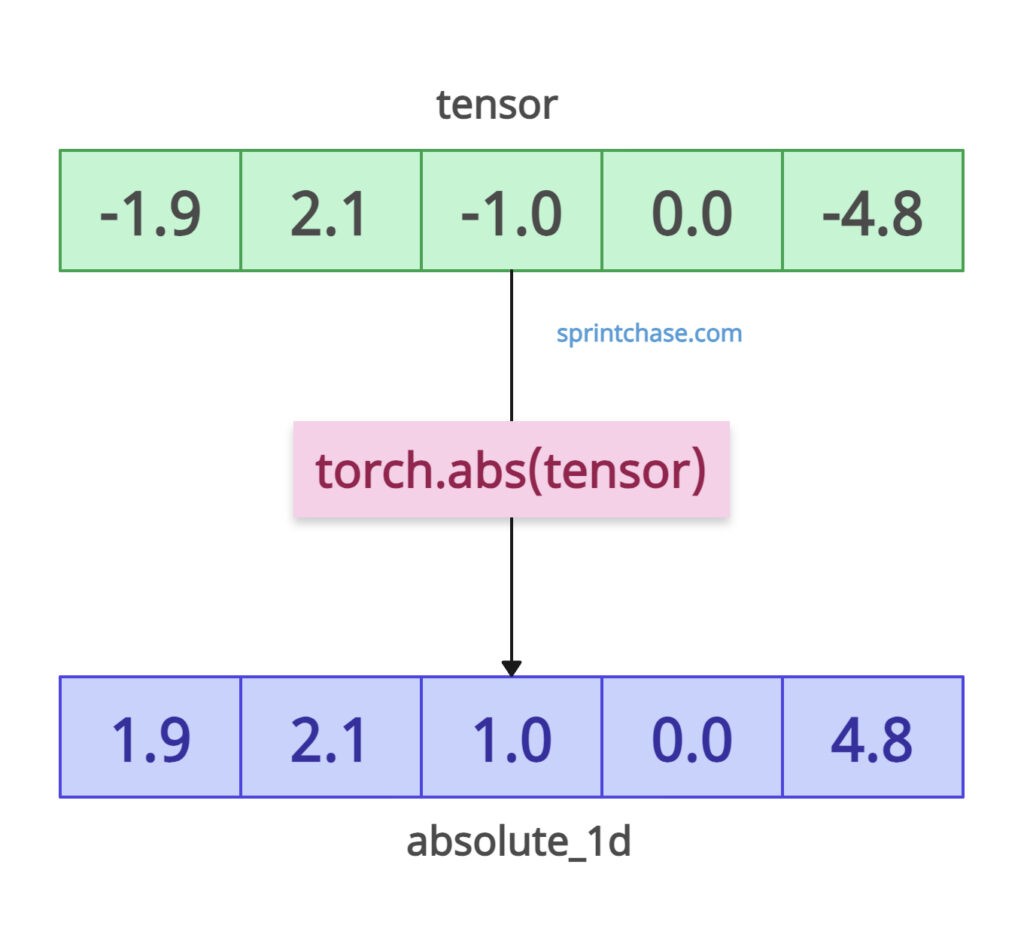

1D Tensor

Let’s define a 1D tensor with mixed (negative and positive) values.

```python
import torch

# Input tensor
tensor = torch.tensor([-1.9, 2.1, -1.0, 0.0, -4.8])
absolute_1d = torch.abs(tensor)
print(absolute_1d)
# Output: tensor([1.9000, 2.1000, 1.0000, 0.0000, 4.8000])
```

The sign of each element is removed while its magnitude is preserved, and 0.0 stays 0.0 (printed as 0.0000).
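One way to see "sign removed, magnitude preserved" is the identity |x| = x · sign(x), which holds for real x. The sketch below checks this identity with `torch.sign` on the same example values.

```python
import torch

tensor = torch.tensor([-1.9, 2.1, -1.0, 0.0, -4.8])

# For real x, |x| equals x multiplied by its sign (-1, 0, or +1)
via_sign = tensor * torch.sign(tensor)
print(torch.equal(torch.abs(tensor), via_sign))  # True
```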

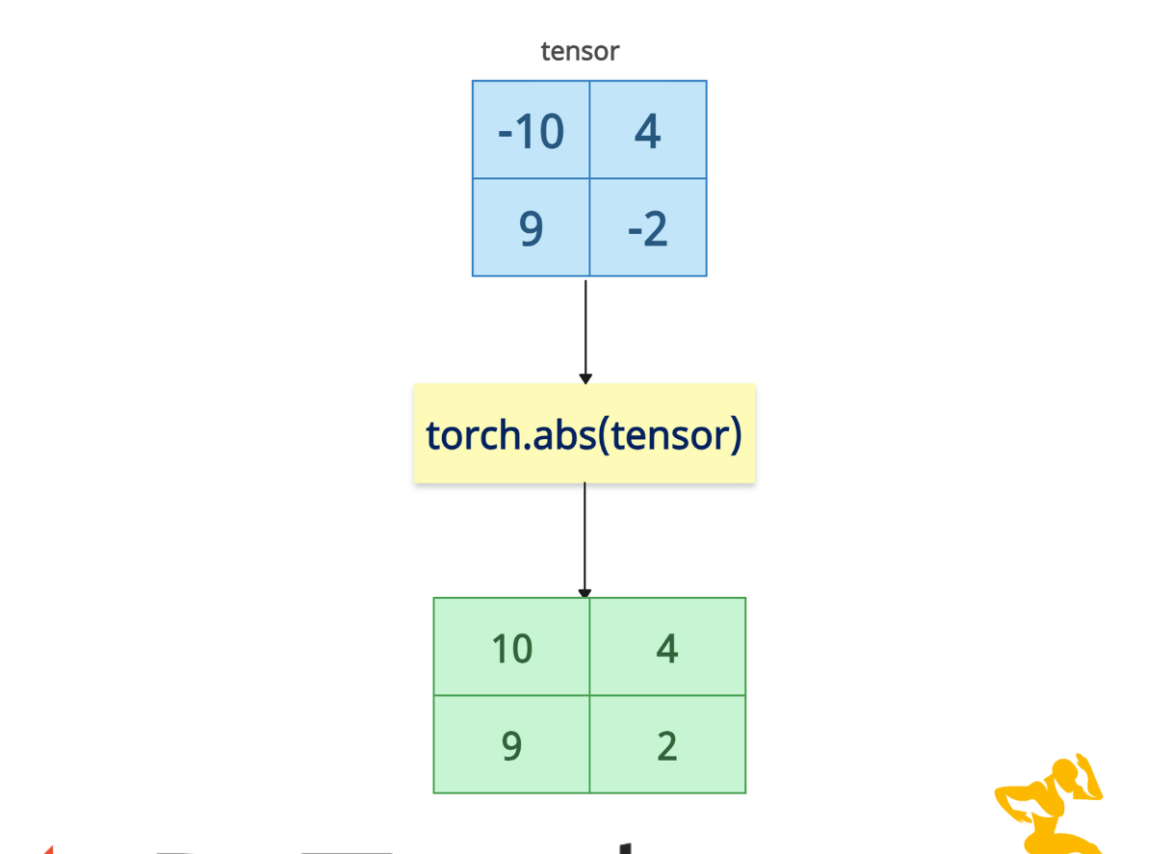

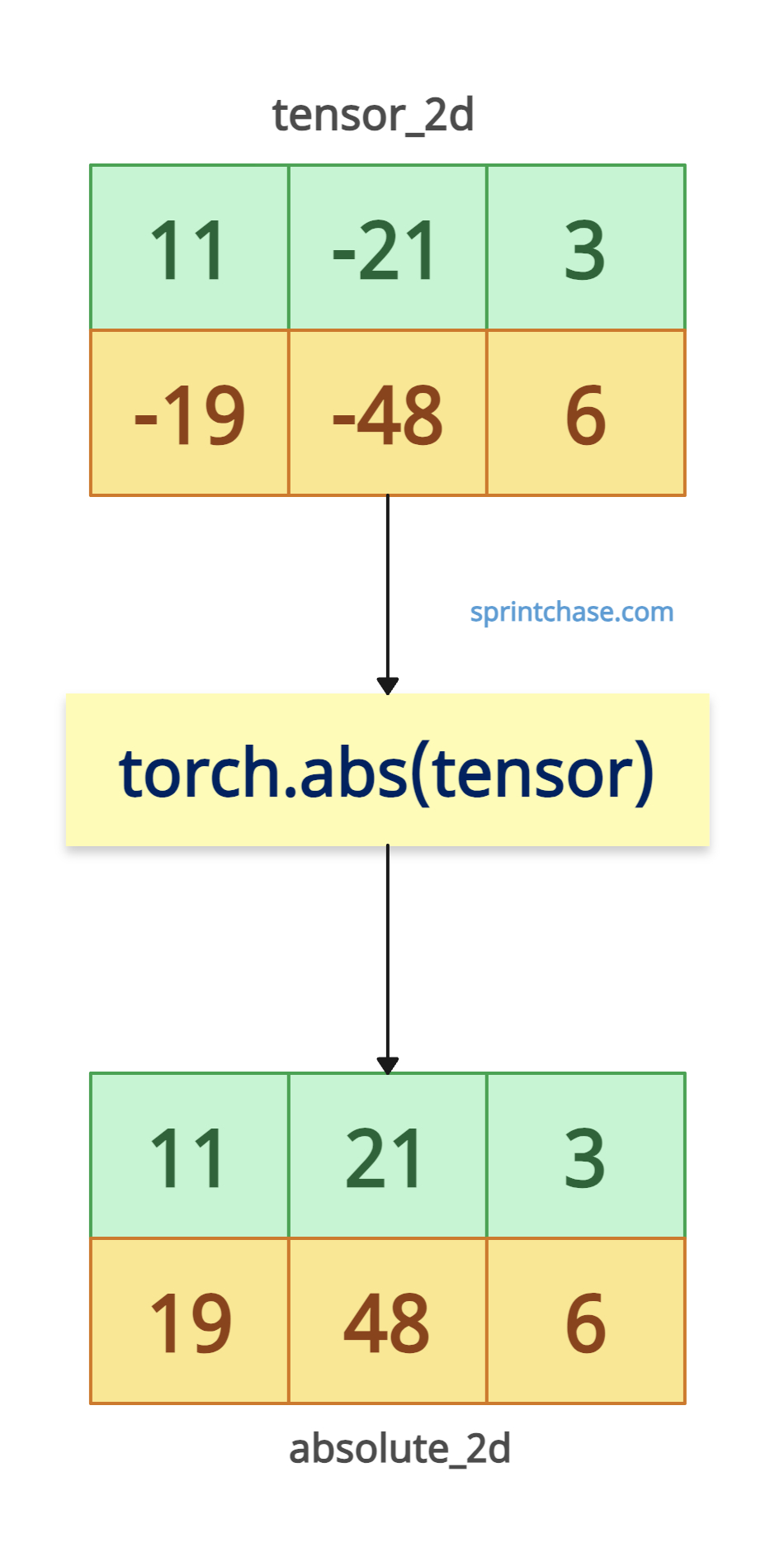

2D or multidimensional Tensor

torch.abs() operates element-wise across all dimensions. It returns a tensor with the same shape as the input, in which every element is replaced by its absolute value.

```python
import torch

tensor_2d = torch.tensor([[11, -21, 3], [-19, -48, 6]])
absolute_2d = torch.abs(tensor_2d)
print(absolute_2d)
# Output:
# tensor([[11, 21,  3],
#         [19, 48,  6]])
```

The output keeps the original 2 × 3 shape of the input tensor.
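The same behavior holds for higher-rank tensors. The sketch below applies torch.abs() to an arbitrary 3D tensor built with `torch.arange` and checks that the shape is unchanged and that no negative values remain.

```python
import torch

# Arbitrary 2 x 3 x 4 example with integers from -12 to 11
t = torch.arange(-12, 12).reshape(2, 3, 4)
result = torch.abs(t)

print(result.shape)          # torch.Size([2, 3, 4])
print(result.min().item())   # 0
print(result.max().item())   # 12
```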

Complex Tensors

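For complex tensors, torch.abs() computes the magnitude (modulus) of each element, |a + bj| = sqrt(a² + b²), and returns a real-valued tensor. For example, a complex64 input gives a float32 result.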

```python
import torch

complex_tensor = torch.tensor([2+2j, 1-9j], dtype=torch.complex64)
# torch.abs supports complex inputs directly; the result is float32
complex_abs = torch.abs(complex_tensor)
print(complex_abs)
# Output: tensor([2.8284, 9.0554])
```
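To connect the output with the formula above, the sketch below recomputes the magnitudes from the `.real` and `.imag` parts, both by hand and with `torch.hypot`. It then checks that both results match torch.abs().

```python
import torch

complex_tensor = torch.tensor([2 + 2j, 1 - 9j], dtype=torch.complex64)

# sqrt(a**2 + b**2) computed by hand from the real and imaginary parts
manual = torch.sqrt(complex_tensor.real ** 2 + complex_tensor.imag ** 2)
# torch.hypot computes the same quantity element-wise
via_hypot = torch.hypot(complex_tensor.real, complex_tensor.imag)

print(torch.allclose(torch.abs(complex_tensor), manual))     # True
print(torch.allclose(torch.abs(complex_tensor), via_hypot))  # True
```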

Using the “out” Parameter

To use the “out” argument, pre-allocate a tensor with a matching shape and dtype (for example, with the torch.empty() function) and pass it as out=. The absolute values are then written into that tensor instead of into a newly allocated one.

```python
import torch

tensor_1d = torch.tensor([11, -21, 3])
# Pre-allocate an int64 tensor with the same shape as the result
pre_allocated = torch.empty(3, dtype=torch.int64)
torch.abs(tensor_1d, out=pre_allocated)
print(pre_allocated)
# Output: tensor([11, 21,  3])
```
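The benefit of `out` shows most clearly when the same buffer is reused across many calls, for example inside a loop. In the sketch below, the batches are made-up data. Every call writes into the same pre-allocated storage, which the `data_ptr()` comparison confirms.

```python
import torch

# Hypothetical stream of integer batches, all with the same shape
batches = [torch.tensor([11, -21, 3]), torch.tensor([-5, 0, 7])]

buffer = torch.empty(3, dtype=torch.int64)
ptr = buffer.data_ptr()  # address of the buffer's storage

for batch in batches:
    torch.abs(batch, out=buffer)
    # The result lands in the existing storage rather than a new tensor
    assert buffer.data_ptr() == ptr
    print(buffer)
```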

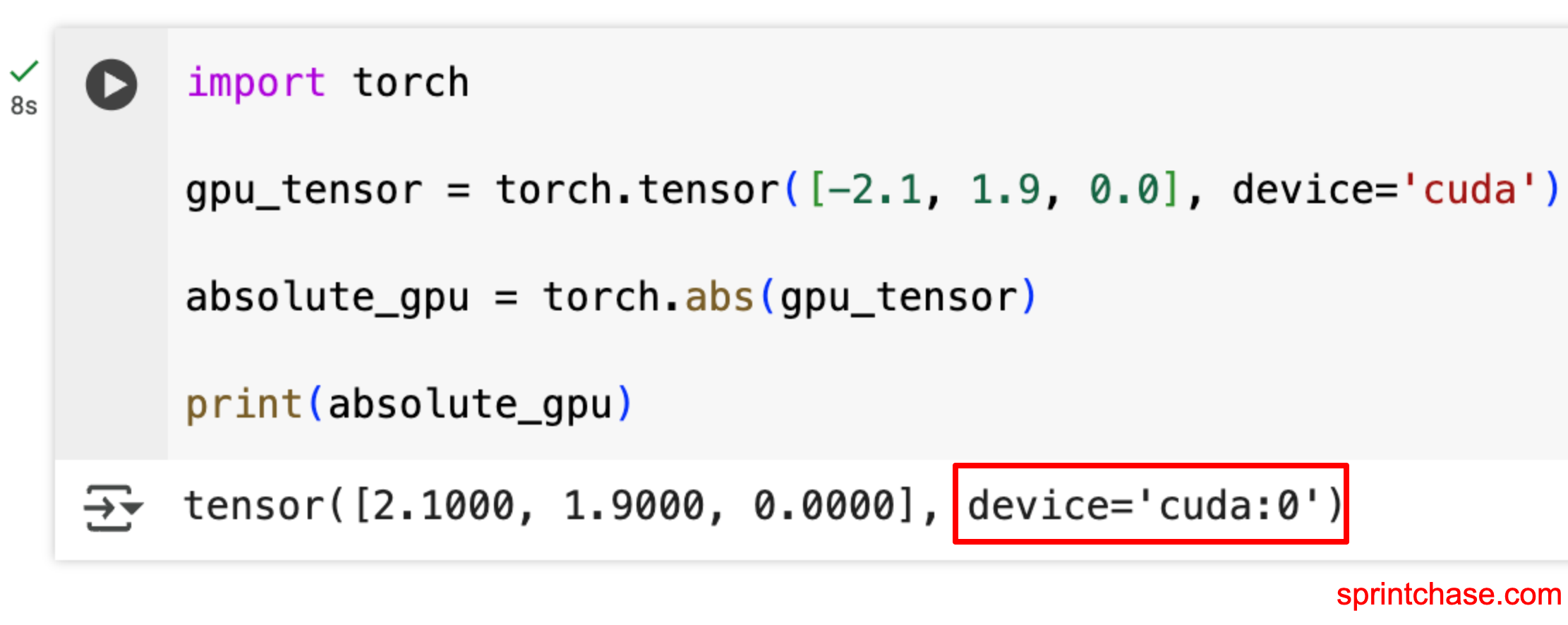

GPU Tensor

If a CUDA-capable GPU is available, you can create the input tensor on the CUDA device. torch.abs() then runs on the GPU and returns a tensor on the same device.

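```python
import torch

# Requires a CUDA-capable GPU and a CUDA-enabled build of PyTorch
gpu_tensor = torch.tensor([-2.1, 1.9, 0.0], device='cuda')
absolute_gpu = torch.abs(gpu_tensor)
print(absolute_gpu)
# Output: tensor([2.1000, 1.9000, 0.0000], device='cuda:0')
```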
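A common pattern is to choose the device at runtime so that the same code runs with or without a GPU. You can then move the result back to the CPU with `.cpu()` before converting it to Python values. This is a sketch, and the fallback-to-CPU logic is an assumption about your setup.

```python
import torch

# Use CUDA when available, otherwise fall back to the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"

x = torch.tensor([-2.1, 1.9, 0.0], device=device)
result = torch.abs(x)

# The output lives on the same device as the input
print(result.device)

# Move to the CPU before converting to a Python list
print(result.cpu().tolist())
```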

In-place Operation with torch.abs_()

To save memory, use the in-place variant Tensor.abs_() (also available as torch.abs_()). It overwrites the tensor's values directly instead of returning a new tensor.

```python
import torch

tensor = torch.tensor([-2.1, -1.9, 0.0])
# Using the in-place operation to modify the tensor
tensor.abs_()
print(tensor)
# Output: tensor([2.1000, 1.9000, 0.0000])
```

In this case, the original tensor is modified, and no new tensor is created, so no new memory is allocated.
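In-place modification has two consequences worth checking. First, the tensor keeps the same storage. Second, autograd rejects in-place changes to a leaf tensor that requires gradients. The sketch below demonstrates both using arbitrary example values.

```python
import torch

tensor = torch.tensor([-2.1, -1.9, 0.0])
ptr_before = tensor.data_ptr()
tensor.abs_()
# Same storage before and after: the values were overwritten in place
print(tensor.data_ptr() == ptr_before)  # True

# In-place ops on a leaf tensor that requires grad raise a RuntimeError
leaf = torch.tensor([-1.0, 2.0], requires_grad=True)
try:
    leaf.abs_()
except RuntimeError as err:
    print("RuntimeError:", err)

# The out-of-place version works and stays part of the autograd graph
safe = torch.abs(leaf)
```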

Application in L1 Loss Calculation

One of the most common practical applications is calculating the L1 loss (mean absolute error):

```python
import torch

predicted = torch.tensor([2.5, 0.0, 2.1])
target = torch.tensor([3.0, -0.5, 2.0])

# Element-wise absolute error, then its mean (the L1 loss)
absolute_diff = torch.abs(predicted - target)
l1_loss = absolute_diff.mean()
print(absolute_diff)  # Output: tensor([0.5000, 0.5000, 0.1000])
print(l1_loss)        # Output: tensor(0.3667)
```
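PyTorch also provides this computation as `torch.nn.functional.l1_loss`. The sketch below reuses the same `predicted` and `target` values to check that the manual version matches the built-in one. It also shows the gradient that flows back through torch.abs(): the sign of each difference divided by the number of elements, because of the mean.

```python
import torch
import torch.nn.functional as F

predicted = torch.tensor([2.5, 0.0, 2.1], requires_grad=True)
target = torch.tensor([3.0, -0.5, 2.0])

manual = torch.abs(predicted - target).mean()
builtin = F.l1_loss(predicted, target)  # reduction="mean" by default
print(torch.allclose(manual, builtin))  # True

# Gradient of mean(|p - t|) with respect to p is sign(p - t) / N
manual.backward()
print(predicted.grad)
```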