Optimal solution: Using torch.tensor() method

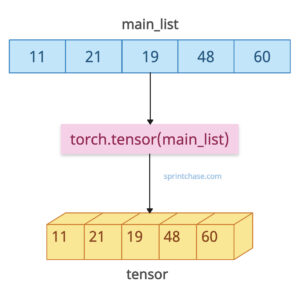

The simplest and most common way to convert a Python list to a PyTorch tensor is the torch.tensor() function. It creates a new tensor from the list's data, and the input list can be one-dimensional or nested (multi-dimensional).

For example, given main_list = [11, 21, 19, 48, 60], calling torch.tensor(main_list) returns tensor([11, 21, 19, 48, 60]). The function copies the data from the list and infers the dtype from its elements.

    import torch

    main_list = [11, 21, 19, 48, 60]
    tensor = torch.tensor(main_list)

    print(tensor)
    # Output: tensor([11, 21, 19, 48, 60])
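To see the copy and dtype inference in practice, here is a minimal sketch (variable names are only illustrative) that changes the list after conversion and checks that the tensor is unaffected.

```python
import torch

main_list = [11, 21, 19, 48, 60]
tensor = torch.tensor(main_list)

# torch.tensor() copies the data, so later changes to the list
# do not reach the tensor.
main_list[0] = 99

print(tensor[0].item())  # still the original value, 11
print(tensor.dtype)      # inferred from the Python ints: torch.int64
print(tensor.shape)      # torch.Size([5])
```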

Data type and device specification

You can specify the tensor's data type explicitly with the dtype argument.

If you want to work with CUDA, you can also place the tensor on the GPU with the device="cuda" argument:

    import torch

    main_list = [11, 21, 19, 48, 60]

    # Data type and device specification:
    # float16 (half-precision) tensor on the GPU (requires a CUDA-capable device)
    tensor = torch.tensor(main_list, dtype=torch.float16, device='cuda')

    print(tensor)
    # Output: tensor([11., 21., 19., 48., 60.], device='cuda:0', dtype=torch.float16)

When you convert a list of integers, the tensor's dtype defaults to torch.int64, but deep learning models usually use float32 for parameters and inputs.

So it is often important to specify the dtype when creating the tensor, for example torch.tensor(main_list, dtype=torch.float32).
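The following sketch puts the two arguments together on any machine. The fallback to the CPU when CUDA is missing is an assumption added here so the snippet runs everywhere; it is not part of the original example.

```python
import torch

main_list = [11, 21, 19, 48, 60]

# Assumption for portability: fall back to the CPU when no CUDA device exists.
device = "cuda" if torch.cuda.is_available() else "cpu"

int_tensor = torch.tensor(main_list)  # dtype inferred: torch.int64
float_tensor = torch.tensor(main_list, dtype=torch.float32, device=device)

print(int_tensor.dtype)     # torch.int64
print(float_tensor.dtype)   # torch.float32
print(float_tensor.device)  # cuda:0 or cpu, depending on the machine

# An existing tensor can also be converted afterwards.
converted = int_tensor.to(torch.float32)
print(converted.dtype)      # torch.float32
```

Passing dtype at creation time avoids building an int64 tensor first and converting it later.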

Mixed data types

What if the input list contains different data types, for example both integers and floats? Tensors can only hold numeric (and Boolean) values, and all elements of a tensor must share one dtype.

Converting such a list therefore upcasts the elements to a common type. If a list contains both integers and floats, every element of the output tensor is a float.

    import torch

    # Mixed data types
    mixed_list = [1, 2., 3.0, 4, 5]
    tensor_float = torch.tensor(mixed_list)

    print(tensor_float)
    # Output: tensor([1., 2., 3., 4., 5.])
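As a rough illustration of the promotion behaviour, the sketch below checks the resulting dtypes and shows that a non-numeric element makes the conversion fail. The exact exception type for strings is not something this article specifies, so the example catches both TypeError and ValueError as an assumption.

```python
import torch

# Integers mixed with floats are promoted to the default float dtype.
float_tensor = torch.tensor([1, 2.0, 3])
print(float_tensor.dtype)  # torch.float32

# Booleans mixed with integers are promoted to integers.
int_tensor = torch.tensor([True, 2, 3])
print(int_tensor.dtype)    # torch.int64

# Non-numeric values such as strings cannot be stored in a tensor.
try:
    torch.tensor([1, "two", 3])
    string_ok = True
except (TypeError, ValueError) as exc:
    string_ok = False
    print(f"Conversion failed: {type(exc).__name__}")
```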

Handling multi-dimensional lists

What if the list is multi-dimensional, such as a list of lists? Pass it to torch.tensor() in the same way, and a list of equal-length lists produces a 2D tensor.

    import torch

    # Handling multi-dimensional lists
    main_2D_list = [[11, 21], [18, 19]]
    tensor_2d = torch.tensor(main_2D_list)

    print(tensor_2d)
    # Output: tensor([[11, 21],
    #                 [18, 19]])
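The nesting depth of the list determines the number of tensor dimensions. A short sketch, with list_3d as a made-up example input:

```python
import torch

main_2D_list = [[11, 21], [18, 19]]
tensor_2d = torch.tensor(main_2D_list)

print(tensor_2d.shape)  # torch.Size([2, 2])
print(tensor_2d.ndim)   # 2

# One more level of nesting gives a 3D tensor.
list_3d = [[[1, 2], [3, 4]], [[5, 6], [7, 8]]]
tensor_3d = torch.tensor(list_3d)

print(tensor_3d.shape)            # torch.Size([2, 2, 2])
print(tensor_3d[1, 0, 1].item())  # 6
```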

Incorrectly structured list

If the input is a nested list whose sublists have different lengths (a ragged list), it cannot be converted into a tensor. Attempting to do so raises the following error: ValueError: expected sequence of length 2 at dim 1 (got 1)

    import torch

    ragged_list = [[1, 2], [3]]
    tensor_ragged = torch.tensor(ragged_list)  # raises ValueError here

    print(tensor_ragged)  # never reached
    # Output: ValueError: expected sequence of length 2 at dim 1 (got 1)
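If you do need a tensor from ragged data, one possible workaround is to pad the shorter rows. This is not the only approach, and it is not one the example above uses. The sketch below relies on torch.nn.utils.rnn.pad_sequence, and the padding value 0 is an arbitrary choice.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

ragged_list = [[1, 2], [3]]

try:
    torch.tensor(ragged_list)
except ValueError as exc:
    print(f"Direct conversion failed: {exc}")

# Convert each row separately, then pad the shorter rows with a
# filler value (0 here, an arbitrary choice).
rows = [torch.tensor(row) for row in ragged_list]
padded = pad_sequence(rows, batch_first=True, padding_value=0)

print(padded.tolist())  # [[1, 2], [3, 0]]
```

Whether padding is appropriate depends on the task, since the filler values become part of the data unless you mask them out later.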

Empty list

If you convert an empty list to a tensor, the result is an empty tensor with zero elements.

    import torch

    # Empty lists
    empty_list = []
    tensor_empty = torch.tensor(empty_list)

    print(tensor_empty)
    # Output: tensor([])
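An empty list gives PyTorch no elements to infer a dtype from, so the default dtype is used. The sketch below inspects the result and shows how to request a different dtype. It assumes the default dtype has not been changed with torch.set_default_dtype().

```python
import torch

tensor_empty = torch.tensor([])

print(tensor_empty.shape)    # torch.Size([0])
print(tensor_empty.numel())  # 0
print(tensor_empty.dtype)    # torch.float32 (the default dtype)

# With no elements to infer from, pass dtype explicitly if you need integers.
empty_int = torch.tensor([], dtype=torch.int64)
print(empty_int.dtype)       # torch.int64
```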

Method 2: torch.as_tensor()

The torch.as_tensor() function also converts its input into a tensor, but it avoids unnecessary copies by sharing memory with the input whenever possible.

Sharing is possible when the input is a NumPy array (or an existing tensor) whose dtype and device already match: the resulting tensor then shares memory with the original, and any modification to one is reflected in the other.

    import torch

    main_list = [11, 21, 19, 48, 60]

    # Conversion with torch.as_tensor()
    tensor = torch.as_tensor(main_list)

    print(tensor)
    # Output: tensor([11, 21, 19, 48, 60])

This approach is especially helpful when working with NumPy arrays:

    import torch
    import numpy as np

    main_list = [11, 21, 19, 48, 60]
    np_array = np.array(main_list)

    # Shares memory with np_array
    tensor = torch.as_tensor(np_array)

    print(tensor)
    # Output: tensor([11, 21, 19, 48, 60])

For Python lists, however, torch.as_tensor() still copies the data, so it offers no memory advantage over torch.tensor().
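The difference between sharing and copying can be checked directly. In this sketch (variable names are illustrative), the source is modified after conversion to see which tensors follow the change.

```python
import numpy as np
import torch

np_array = np.array([11, 21, 19, 48, 60])
shared = torch.as_tensor(np_array)  # reuses the array's memory
copied = torch.tensor(np_array)     # always makes a copy

np_array[0] = 99
print(shared[0].item())  # 99: the change is visible through the tensor
print(copied[0].item())  # 11: the copy is unaffected

main_list = [11, 21, 19, 48, 60]
from_list = torch.as_tensor(main_list)  # a list is copied
main_list[0] = 99
print(from_list[0].item())  # 11
```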

Method 3: Conversion via NumPy (torch.from_numpy())

If your data is already in a NumPy array, you can use torch.from_numpy(). It is efficient because the tensor shares memory with the existing array instead of copying it. To convert a list this way, first turn it into an array with np.array():

    import torch
    import numpy as np

    main_list = [11, 21, 19, 48, 60]

    # Conversion via NumPy (torch.from_numpy())
    numpy_array = np.array(main_list)
    tensor_from_numpy = torch.from_numpy(numpy_array)

    print(tensor_from_numpy)
    # Output: tensor([11, 21, 19, 48, 60])
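Two consequences of the shared memory are worth checking: the tensor keeps the array's dtype, and in-place changes travel in both directions. The sketch below uses made-up float values and also shows that from_numpy() rejects a plain list.

```python
import numpy as np
import torch

numpy_array = np.array([1.5, 2.5, 3.5])  # NumPy defaults to float64
tensor_from_numpy = torch.from_numpy(numpy_array)

print(tensor_from_numpy.dtype)  # torch.float64: the NumPy dtype is kept

# Memory is shared, so an in-place change to the tensor shows up in the array.
tensor_from_numpy[0] = 10.0
print(numpy_array[0])  # 10.0

# from_numpy() only accepts NumPy arrays; a plain list is rejected.
try:
    torch.from_numpy([1.5, 2.5, 3.5])
    list_accepted = True
except TypeError as exc:
    list_accepted = False
    print(f"Conversion failed: {exc}")
```

Because the dtype is inherited, a float64 array yields a float64 tensor. Convert it with .to(torch.float32) if your model expects float32.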

Method 4: Using the Constructor torch.Tensor()

The torch.Tensor() constructor is less flexible than torch.tensor(): it does not infer the dtype from the data and always uses the default dtype (typically torch.float32).
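To make the difference concrete, here is a small comparison sketch. It assumes the default dtype is still torch.float32. It also shows a common pitfall: the constructor treats a bare integer as a size, not as a value.

```python
import torch

main_list = [11, 21, 19, 48, 60]

from_constructor = torch.Tensor(main_list)  # always the default dtype
from_function = torch.tensor(main_list)     # dtype inferred from the data

print(from_constructor.dtype)  # torch.float32 (the default dtype)
print(from_function.dtype)     # torch.int64

# A bare integer is read as a size by the constructor, but as a value
# by torch.tensor().
sized = torch.Tensor(3)   # 1D tensor with 3 uninitialized elements
scalar = torch.tensor(3)  # 0-dimensional tensor holding the value 3

print(sized.shape)   # torch.Size([3])
print(scalar.shape)  # torch.Size([])
```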

    import torch

    main_list = [11, 21, 19, 48, 60]

    # Using the constructor torch.Tensor()
    tensor_constructor = torch.Tensor(main_list)

    print(tensor_constructor)
    # Output: tensor([11., 21., 19., 48., 60.])

That's all!