The torch.bernoulli() function draws binary random numbers (0 or 1) from a Bernoulli distribution. Each element of the output tensor is sampled independently, using the corresponding element of the input tensor as its probability of being 1.

But what the heck is a Bernoulli distribution?

The Bernoulli distribution models a single trial with exactly two outcomes: “success” (usually denoted as 1) or “failure” (usually denoted as 0).

Formally, a random variable x follows a Bernoulli distribution if it takes the value 1 with probability p (success) and the value 0 with probability 1 − p (failure), where 0 ≤ p ≤ 1.

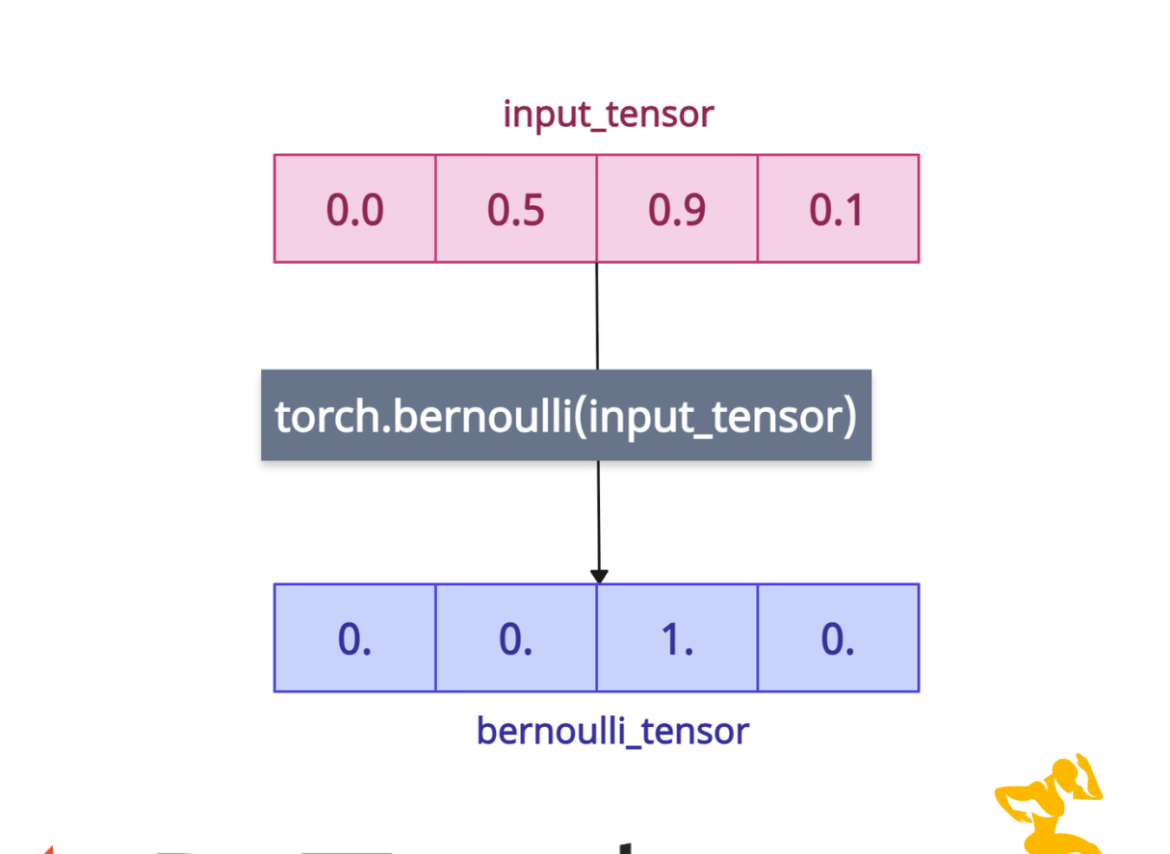
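In compact form, the definition above is the probability mass function below: plugging in k = 1 gives p, and k = 0 gives 1 − p. The mean follows directly (0 · (1 − p) + 1 · p = p), which is why the fraction of 1s across many samples approaches p.

```latex
\begin{aligned}
P(x = k) &= p^{k}(1 - p)^{1 - k}, \quad k \in \{0, 1\} \\
\mathbb{E}[x] &= p \\
\operatorname{Var}(x) &= p(1 - p)
\end{aligned}
```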

In torch.bernoulli(), each element of the input tensor is treated as the probability of getting a 1.

If a value is 0.0, the probability of getting 1 is zero, so it always returns 0.

If a value is 1.0, the probability of getting 1 is one, so it always returns 1.

For values strictly between 0 and 1 (such as 0.1 to 0.9), it returns 1 with that probability and 0 otherwise.

Here is how a few example probabilities translate into outcomes:

- 0.0 → 0% chance of 1, so it is always 0.
- 0.5 → 50% chance of 1
- 0.9 → 90% chance of 1
- 0.1 → 10% chance of 1
- 0.3 → 30% chance of 1
- 0.6 → 60% chance of 1
- 1.0 → 100% chance of 1, so it is always 1.
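To see these percentages emerge, here is a small sketch that repeats each probability from the list many times and measures how often 1 comes up. The sample size of 100,000 draws is an arbitrary choice; with that many draws, the observed fraction should land close to each probability, while 0.0 and 1.0 are exact.

```python
import torch

# Probabilities from the list above
probs = torch.tensor([0.0, 0.5, 0.9, 0.1, 0.3, 0.6, 1.0])

# Repeat the row num_draws times: shape (num_draws, 7)
num_draws = 100_000
samples = torch.bernoulli(probs.repeat(num_draws, 1))

# The fraction of 1s in each column estimates that column's probability
observed = samples.mean(dim=0)

for p, freq in zip(probs.tolist(), observed.tolist()):
    print(f"p = {p:.1f} -> observed fraction of 1s = {freq:.3f}")
```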

Returning to PyTorch, the output tensor has the same shape and data type as the input tensor.
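A quick check of that claim, using an arbitrary 2×3 tensor stored as float64 (the specific values are just for illustration):

```python
import torch

# A 2-D tensor of probabilities stored as float64 (double)
probs = torch.tensor([[0.2, 0.5, 0.8],
                      [1.0, 0.0, 0.3]], dtype=torch.float64)

samples = torch.bernoulli(probs)

print(samples.shape)  # torch.Size([2, 3]), same as probs
print(samples.dtype)  # torch.float64, same as probs
print(samples)        # random 0s and 1s; [1, 0] is always 1 and [1, 1] is always 0
```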

Syntax

torch.bernoulli(input, *, generator=None, out=None)

Parameters

| Argument | Description |
| --- | --- |
| input (Tensor) | A tensor of probabilities, each in [0, 1]. It must have a floating-point data type, such as float (float32) or double (float64). |
| generator (torch.Generator, optional) | A pseudorandom number generator used for sampling. Keyword-only. Defaults to None, which uses PyTorch's global generator. |
| out (Tensor, optional) | A pre-allocated tensor that receives the sampled values. Keyword-only. Defaults to None; pass a tensor here if you want to reuse existing memory. |

Basic Bernoulli sampling

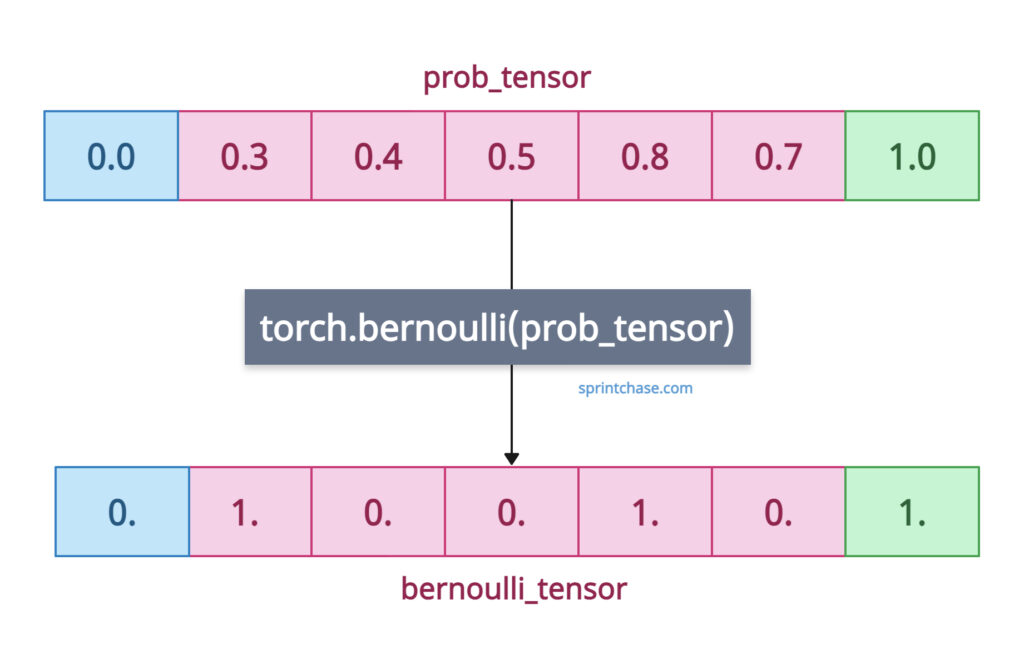

Let’s define a 1D tensor and generate a binary tensor based on the given input probabilities.

```python
import torch

prob_tensor = torch.tensor([0.0, 0.3, 0.4, 0.5, 0.8, 0.7, 1.0])
print(prob_tensor)

bernoulli_tensor = torch.bernoulli(prob_tensor)
print(bernoulli_tensor)
# Output (one possible run): tensor([0., 1., 0., 0., 1., 0., 1.])
```

This output is random, so running the code multiple times will generally produce different results.
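Not every position is random, though: entries with probability 0.0 and 1.0 never change. A short sketch that repeats the same call five times (an arbitrary number of runs) and checks which columns stay constant:

```python
import torch

prob_tensor = torch.tensor([0.0, 0.3, 0.4, 0.5, 0.8, 0.7, 1.0])

# Draw five independent samples and stack them into a (5, 7) tensor
runs = torch.stack([torch.bernoulli(prob_tensor) for _ in range(5)])
print(runs)

# A column is constant if its minimum equals its maximum across all runs
constant = runs.min(dim=0).values == runs.max(dim=0).values
print(constant)  # first and last columns are always True; the middle ones may vary
```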

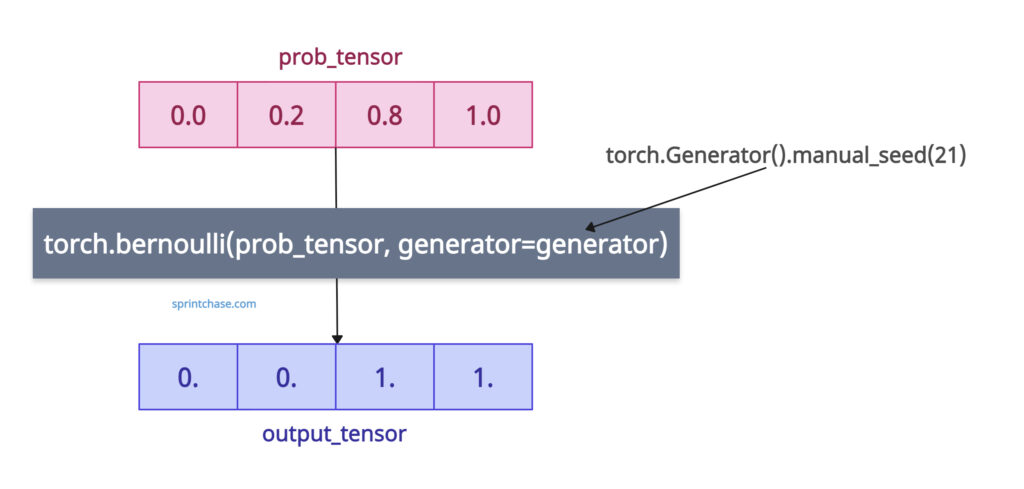

Reproducible sampling

To reproduce the same output across multiple executions, we need to fix the seed of the random number generator. Thankfully, the “generator” argument lets us pass a dedicated torch.Generator object, which we can seed with its own manual_seed() method.

```python
import torch

# Set up a generator with a fixed seed
generator = torch.Generator().manual_seed(21)

prob_tensor = torch.tensor([0.0, 0.2, 0.8, 1.0])
print(prob_tensor)
# Output: tensor([0.0000, 0.2000, 0.8000, 1.0000])

# Sample with the seeded generator
output_tensor = torch.bernoulli(prob_tensor, generator=generator)
print(output_tensor)
# Output (same on every run): tensor([0., 0., 1., 1.])
```

Since we fixed the seed, we got the same output each time.
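One way to confirm this reproducibility within a single script is to create two generators with the same seed (21, reused from the example above) and compare their samples. The sketch also shows the alternative of seeding PyTorch's global generator with torch.manual_seed(), which is what torch.bernoulli() draws from when generator is left as None.

```python
import torch

prob_tensor = torch.tensor([0.0, 0.2, 0.8, 1.0])

# Two independent generators seeded identically
gen_a = torch.Generator().manual_seed(21)
gen_b = torch.Generator().manual_seed(21)

sample_a = torch.bernoulli(prob_tensor, generator=gen_a)
sample_b = torch.bernoulli(prob_tensor, generator=gen_b)
print(torch.equal(sample_a, sample_b))  # True: same seed, same random sequence

# Alternative: seed the global generator instead of passing one explicitly
torch.manual_seed(21)
global_a = torch.bernoulli(prob_tensor)
torch.manual_seed(21)
global_b = torch.bernoulli(prob_tensor)
print(torch.equal(global_a, global_b))  # True
```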

Usage with the output tensor

Let’s say I have created a pre-allocated tensor using the torch.zeros() function.

Now I want to store the sampled Bernoulli values in this tensor. How can I do that? Well, that’s where the “out” argument comes into play.

```python
import torch

probs = torch.tensor([0.4, 0.6, 0.9])
out = torch.zeros(3)  # Pre-allocated output tensor

# The sampled values are written directly into `out`
torch.bernoulli(probs, out=out)
print(out)
# Output (one possible run): tensor([0., 0., 1.])
```
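The out argument pays off mostly when you sample repeatedly and want to reuse the same memory. A minimal sketch, using a loop of three iterations purely for illustration, that refills one buffer each time and checks that its storage address (data_ptr()) never changes:

```python
import torch

probs = torch.tensor([0.4, 0.6, 0.9])
out = torch.zeros(3)            # allocated once
original_ptr = out.data_ptr()   # memory address of the buffer's data

for step in range(3):
    # Each call overwrites the contents of `out` in place
    torch.bernoulli(probs, out=out)
    print(step, out)

print(out.data_ptr() == original_ptr)  # True: no new memory was allocated
```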

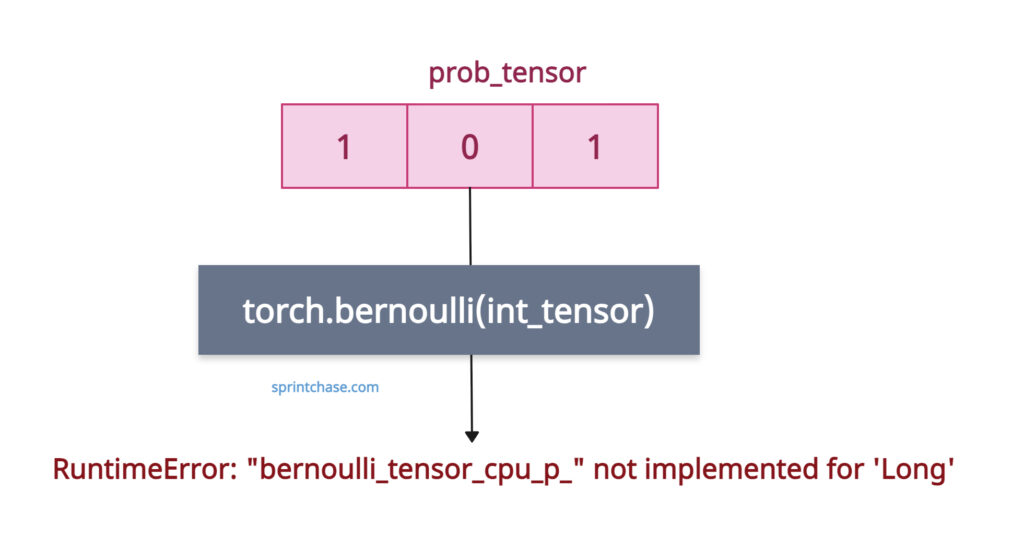

Integer input error

torch.bernoulli() requires a floating-point input tensor. If you pass an integer tensor anyway, it throws a RuntimeError.

```python
import torch

int_tensor = torch.tensor([1, 0, 1])  # integer dtype (torch.int64, a.k.a. 'Long')
bernoulli_tensor = torch.bernoulli(int_tensor)
print(bernoulli_tensor)
# Exception: RuntimeError: "bernoulli_tensor_cpu_p_" not implemented for 'Long'
```
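If your data starts out as integers, converting it to a floating-point dtype first avoids the error. In this particular example the values are only 0 and 1, so the result is deterministic and matches the input:

```python
import torch

int_tensor = torch.tensor([1, 0, 1])  # dtype: torch.int64 ('Long')

# Convert to float32 before sampling
bernoulli_tensor = torch.bernoulli(int_tensor.float())
print(bernoulli_tensor)  # tensor([1., 0., 1.])
```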

What if the input is > 1.0 or < 0.0?

If any input value is greater than 1.0 or less than 0.0, torch.bernoulli() throws the following error:

RuntimeError: Expected p_in >= 0 && p_in <= 1 to be true, but got false.

```python
import torch

prob_tensor = torch.tensor([10., 0.20, 20.8, 1.0])
print(prob_tensor)
# Output: tensor([10.0000,  0.2000, 20.8000,  1.0000])

bernoulli_tensor = torch.bernoulli(prob_tensor)
print(bernoulli_tensor)
# Output:
# Traceback (most recent call last):
#   ...
#     bernoulli_tensor = torch.bernoulli(prob_tensor)
# RuntimeError: Expected p_in >= 0 && p_in <= 1 to be true, but got false.
```

To avoid this error, make sure every value in your input tensor lies between 0 and 1 (inclusive).
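Two common ways to guard against this are sketched below. The helper validate_probs is hypothetical (it is not part of PyTorch): it checks the range up front and raises a more descriptive error. Alternatively, if clipping is acceptable for your data, torch.clamp() forces every value into [0, 1]; note that this silently changes the data (10.0 and 20.8 both become 1.0), so use it only when that is what you want.

```python
import torch

def validate_probs(probs: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: raise a readable error if any value is outside [0, 1]."""
    out_of_range = (probs < 0) | (probs > 1)
    if out_of_range.any():
        raise ValueError(
            f"probabilities must be in [0, 1], got {probs[out_of_range].tolist()}"
        )
    return probs

prob_tensor = torch.tensor([10., 0.20, 20.8, 1.0])

# Option 1: fail early with a descriptive message
error_message = None
try:
    torch.bernoulli(validate_probs(prob_tensor))
except ValueError as err:
    error_message = str(err)
print(error_message)

# Option 2: clip values into [0, 1] before sampling (this changes the data!)
clipped = prob_tensor.clamp(0.0, 1.0)
print(clipped)  # tensor([1.0000, 0.2000, 1.0000, 1.0000])
print(torch.bernoulli(clipped))
```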

That’s all!