The torch.clone() method creates a deep copy of a tensor in PyTorch. The cloned tensor has identical data, shape, and dtype but resides in a separate memory location.

Because the clone is a deep copy, modifying it does not alter the original tensor. With a deep copy, changes are not reflected in the original; with a shallow copy (for example, a view that shares the same memory), they are.

The clone is also part of the autograd computation graph, so gradients computed on the clone propagate back to the original tensor.
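To make the graph membership concrete, here is a minimal sketch (the variable names are illustrative). It checks that a clone of a tensor created with requires_grad=True carries a grad_fn, and shows the commonly used detach().clone() pattern for cases where a copy outside the graph is wanted instead.

```python
import torch

w = torch.tensor([1.0, 2.0], requires_grad=True)

# A plain clone stays attached to the autograd graph.
tracked = w.clone()
print(tracked.requires_grad)        # True
print(tracked.grad_fn is not None)  # True

# Detaching first yields an independent copy that autograd ignores.
untracked = w.detach().clone()
print(untracked.requires_grad)      # False
print(untracked.grad_fn is None)    # True
```

Which form to use depends on intent: keep the plain clone when the copy should contribute gradients, and detach first when it is only needed as data.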

Syntax

torch.clone(input, memory_format=torch.preserve_format)

Parameters

| Argument | Description |
| --- | --- |
| input (Tensor) | The input tensor. |
| memory_format (torch.memory_format, optional) | The desired memory format for the output tensor. torch.preserve_format (default) retains the input’s memory layout; torch.contiguous_format forces contiguous memory; torch.channels_last uses the NHWC layout favored by ConvNets. |
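As a small illustration of the memory_format argument, the sketch below clones a transposed 2D tensor both ways and compares strides. The tensor and variable names are made up for the example; it assumes a dense, non-overlapping input, which is the case where torch.preserve_format keeps the input's strides.

```python
import torch

base = torch.arange(6).reshape(2, 3)  # contiguous, strides (3, 1)
transposed = base.t()                 # shape (3, 2), strides (1, 3)

# Default (torch.preserve_format): the clone keeps the transposed strides.
kept = torch.clone(transposed)

# torch.contiguous_format: the clone is repacked in row-major order.
packed = torch.clone(transposed, memory_format=torch.contiguous_format)

print(transposed.stride(), kept.stride(), packed.stride())
print(kept.is_contiguous(), packed.is_contiguous())
print(torch.equal(kept, packed))  # the values are the same either way
```

Only the physical arrangement in memory differs between the two clones; indexing either one returns the same elements.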

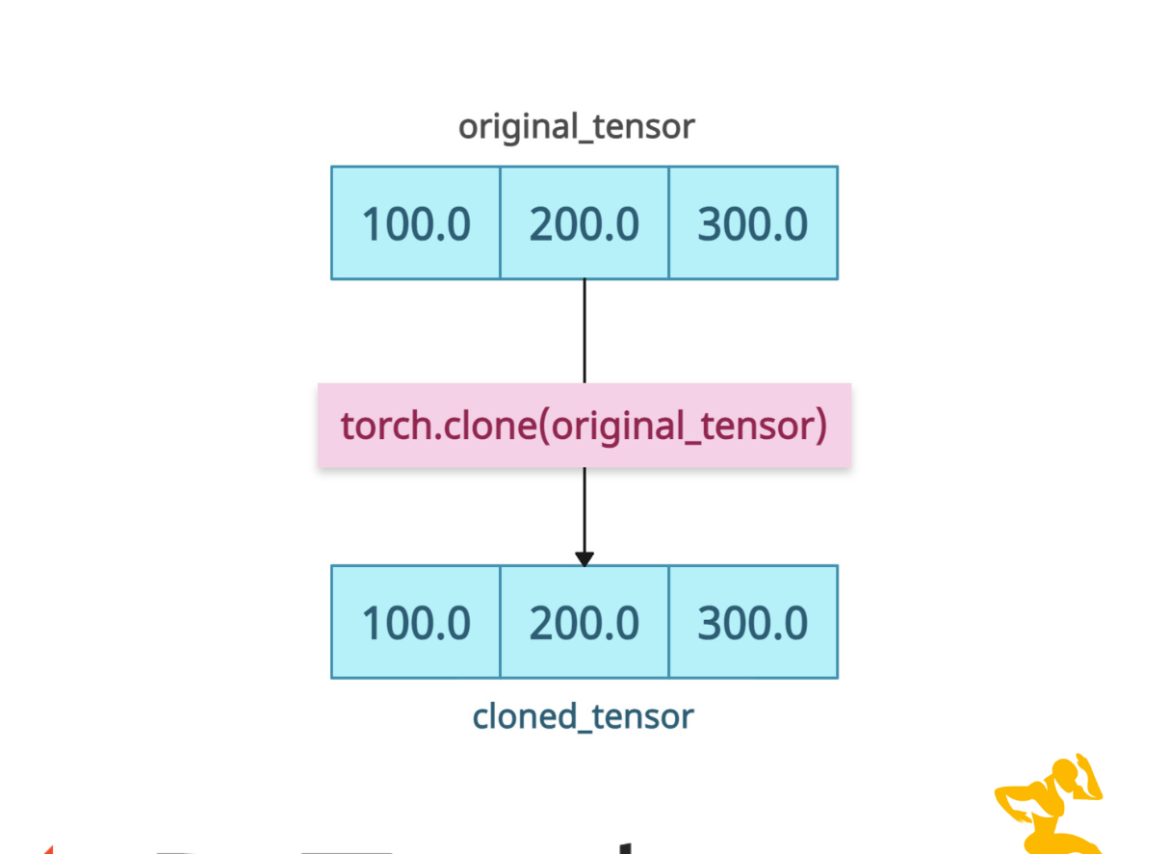

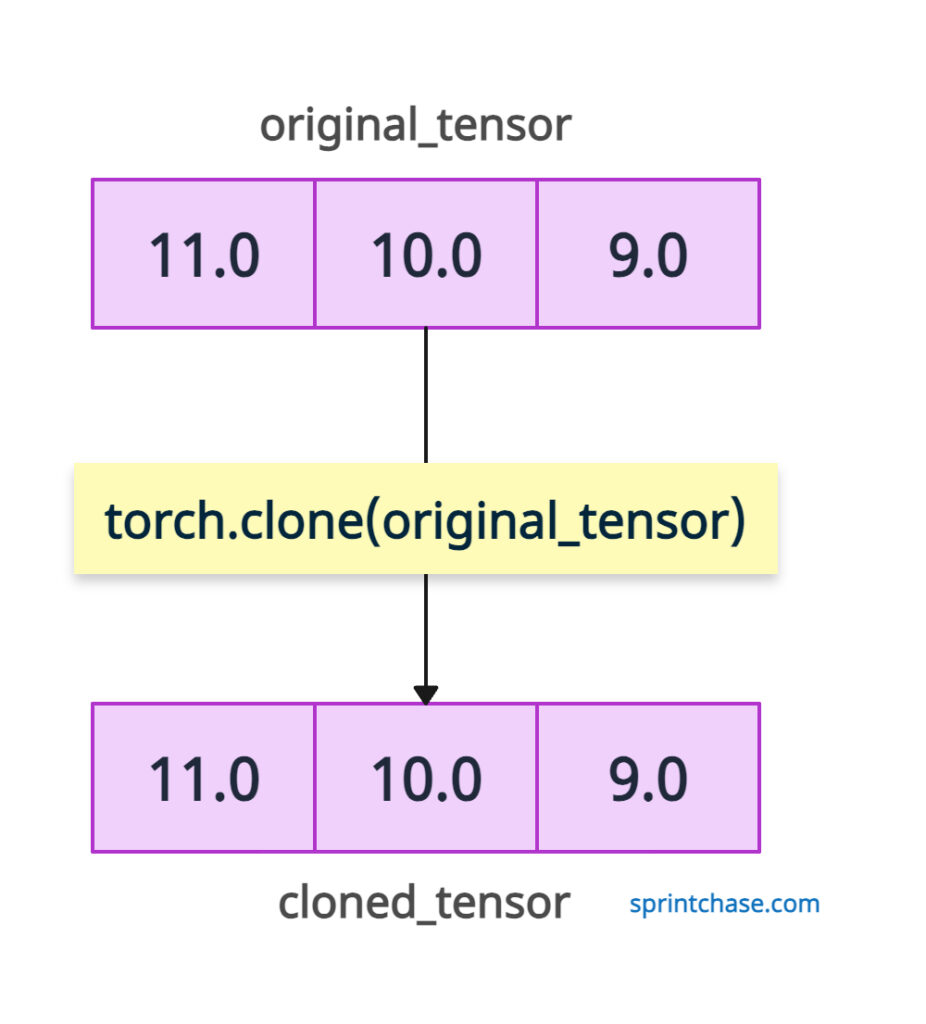

Copying a tensor

Let’s define a tensor, clone it, and check that the clone is a deep copy.

import torch

original_tensor = torch.tensor([11, 10, 9])

cloned_tensor = torch.clone(original_tensor)

print("Original Tensor:", original_tensor)

# Output: Original Tensor: tensor([11, 10, 9])

print("Cloned Tensor:", cloned_tensor)

# Output: Cloned Tensor: tensor([11, 10, 9])

# Modify the cloned tensor

cloned_tensor[0] = 100

print("After modifying cloned tensor:", cloned_tensor)

# Output: After modifying cloned tensor: tensor([100,  10,   9])

print("Original Tensor:", original_tensor)

# Output: Original Tensor: tensor([11, 10, 9])

In the above code, you can see that we cloned an original tensor, and its values are the same as the original.

After cloning, we modified the cloned tensor by changing the value of its first element from 11 to 100 and verified that the change was not reflected in the original tensor. The original tensor remains unchanged, which confirms that the clone is a deep copy.
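For contrast, here is a short sketch of what sharing memory looks like. It compares a plain assignment and a view against a clone, using data_ptr() to check whether two tensors start at the same memory address. The variable names are illustrative.

```python
import torch

original = torch.tensor([11, 10, 9])

alias = original           # plain assignment: the very same tensor object
view = original.view(3)    # a view: new tensor object, shared storage
deep = original.clone()    # a clone: new tensor object, new storage

# data_ptr() returns the address of the tensor's first element.
print(alias.data_ptr() == original.data_ptr())  # True
print(view.data_ptr() == original.data_ptr())   # True
print(deep.data_ptr() == original.data_ptr())   # False

view[0] = 100   # writes through to original (and alias)
deep[1] = -1    # touches only the clone

print(original.tolist())  # [100, 10, 9]
print(deep.tolist())      # [11, -1, 9]
```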

Memory layout control

We will create a 4D tensor with the channels-last memory layout, clone it once with the layout preserved and once with the default contiguous layout, and check the results using the Tensor.is_contiguous() method.

import torch

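# Create a 4D (NCHW) tensor with channels-last memory format.

# Height and width are larger than 1 here: with 1x1 spatial dimensions,

# channels-last and the default layout are indistinguishable.

x = torch.randn(3, 4, 2, 2).to(memory_format=torch.channels_last)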

# Clone that preserves channels-last memory format

y = torch.clone(x) # Preserves original memory format (channels_last)

# Clone that forces contiguous memory format (default layout)

z = torch.clone(x, memory_format=torch.contiguous_format)

# Print memory format check

print("Is x channels_last? ", x.is_contiguous(memory_format=torch.channels_last))

print("Is y channels_last? ", y.is_contiguous(memory_format=torch.channels_last))

print("Is z contiguous? ", z.is_contiguous())

# Output:

# Is x channels_last? True

# Is y channels_last? True

# Is z contiguous? True

In the above code, we demonstrate how torch.clone() can preserve or change a tensor’s memory layout.

A tensor x is created with channels_last format, which is often optimized for performance on GPUs in convolutional models.

Cloning it normally (y) retains the same layout, while cloning with memory_format=torch.contiguous_format (z) converts it to the default layout.

Controlling the memory format matters when an operator or library expects a particular layout, or when a layout such as channels_last runs faster for the model at hand.
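The layout difference can also be seen directly in the strides. The following sketch uses a hypothetical batch of shape (2, 3, 4, 5), chosen so that height and width are larger than 1 and the two layouts are actually distinct. The logical shape and the values stay the same; only the order of elements in memory changes.

```python
import torch

# Hypothetical batch: N=2, C=3, H=4, W=5.
x = torch.randn(2, 3, 4, 5).to(memory_format=torch.channels_last)
y = torch.clone(x)                                         # keeps channels_last
z = torch.clone(x, memory_format=torch.contiguous_format)  # default NCHW order

print(x.shape == y.shape == z.shape)  # True: the logical shape never changes
print(x.stride())  # (60, 1, 15, 3): the channel stride is 1 (NHWC in memory)
print(y.stride())  # (60, 1, 15, 3)
print(z.stride())  # (60, 20, 5, 1): the width stride is 1 (NCHW in memory)
print(torch.equal(x, z))  # True: same values, different arrangement
```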

Gradient propagation

The clone is a differentiable operation. Gradients flow back to the original tensor.

import torch

orig = torch.tensor([1.], requires_grad=True)

cloned = torch.clone(orig)

loss = cloned.sum()

loss.backward()

print(orig.grad)

# Output: tensor([1.])

That’s it!