The torch.add() function performs element-wise addition of two tensors, or adds a scalar to a tensor, and can optionally scale the second operand first. Thanks to broadcasting, it can also combine tensors whose shapes differ but are compatible.

A related function, torch.sum(), does not perform element-wise addition. Instead, it is a reduction: it sums the elements of a single tensor, either all of them or along a given dimension.
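To make the difference concrete, here is a small comparison. The tensor names x, y, and m are illustrative only. torch.add() keeps the input shape, while torch.sum() collapses elements.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0])
y = torch.tensor([10.0, 20.0, 30.0])

# torch.add: element-wise, the output keeps the input shape
added = torch.add(x, y)
print(added)        # tensor([11., 22., 33.])

# torch.sum: reduction, collapses all elements into a 0-d tensor
total = torch.sum(x)
print(total)        # tensor(6.)

# torch.sum can also reduce along one dimension
m = torch.stack([x, y])        # shape (2, 3)
print(torch.sum(m, dim=0))     # tensor([11., 22., 33.])
```

Summing a stacked tensor along dim=0 happens to reproduce torch.add(x, y), but it is still a reduction over one tensor, not a binary element-wise operation.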

torch.add() supports PyTorch’s broadcasting to a common shape, type promotion, and integer, float, and complex inputs. The output dtype follows the type promotion rules; for example, adding a float32 tensor and an int32 tensor produces a float32 tensor.
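A quick way to see type promotion in action is to check the dtype of the result. The variables f, i, and c are illustrative; torch.result_type() reports the promoted dtype without performing the addition.

```python
import torch

f = torch.tensor([1.5, 2.5], dtype=torch.float32)
i = torch.tensor([1, 2], dtype=torch.int32)
c = torch.tensor([1 + 2j, 3 - 1j], dtype=torch.complex64)

# float32 + int32 -> float32
print(torch.add(f, i).dtype)     # torch.float32

# int32 + complex64 -> complex64
print(torch.add(i, c).dtype)     # torch.complex64

# Ask for the promoted dtype without computing anything
print(torch.result_type(f, i))   # torch.float32
```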

Here is the general formula for the addition:

$$\text{out}_i = \text{input}_i + \alpha \times \text{other}_i$$

To scale other before the addition, pass the alpha argument, as described in the Syntax section below.

Syntax

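```python
torch.add(input, other, *, alpha=1, out=None)
```

Note that alpha and out are keyword-only arguments, so they must be passed by name.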

Parameters

| Argument | Description |
| --- | --- |
| input (Tensor) | The first input tensor. |
| other (Tensor or Number) | The tensor or scalar value to add to input. |
| alpha (Number, optional) | Scalar multiplier applied to other before the addition. Default: 1. |
| out (Tensor, optional) | Output tensor that receives the result. Default: None. |
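None of the examples below uses out, so here is a minimal sketch of it. The names a, b, result, and returned are illustrative. The result is written into a preallocated tensor, and that same storage is returned.

```python
import torch

a = torch.tensor([1.0, 2.0, 3.0])
b = torch.tensor([4.0, 5.0, 6.0])

# Preallocate a float32 tensor of the right shape
result = torch.empty(3)

# alpha and out are passed by keyword; computes a + 2 * b into result
returned = torch.add(a, b, alpha=2, out=result)
print(result)   # tensor([ 9., 12., 15.])

# The returned tensor shares storage with the out tensor
print(returned.data_ptr() == result.data_ptr())  # True
```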

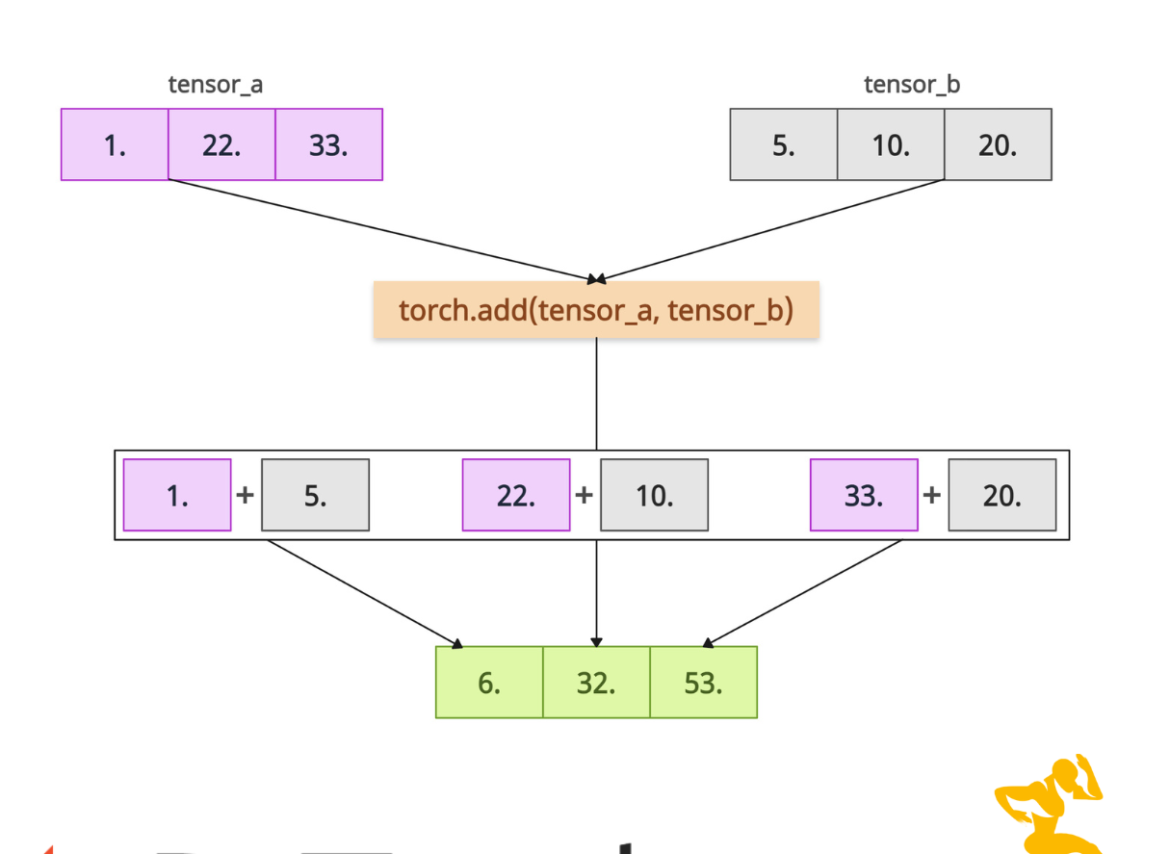

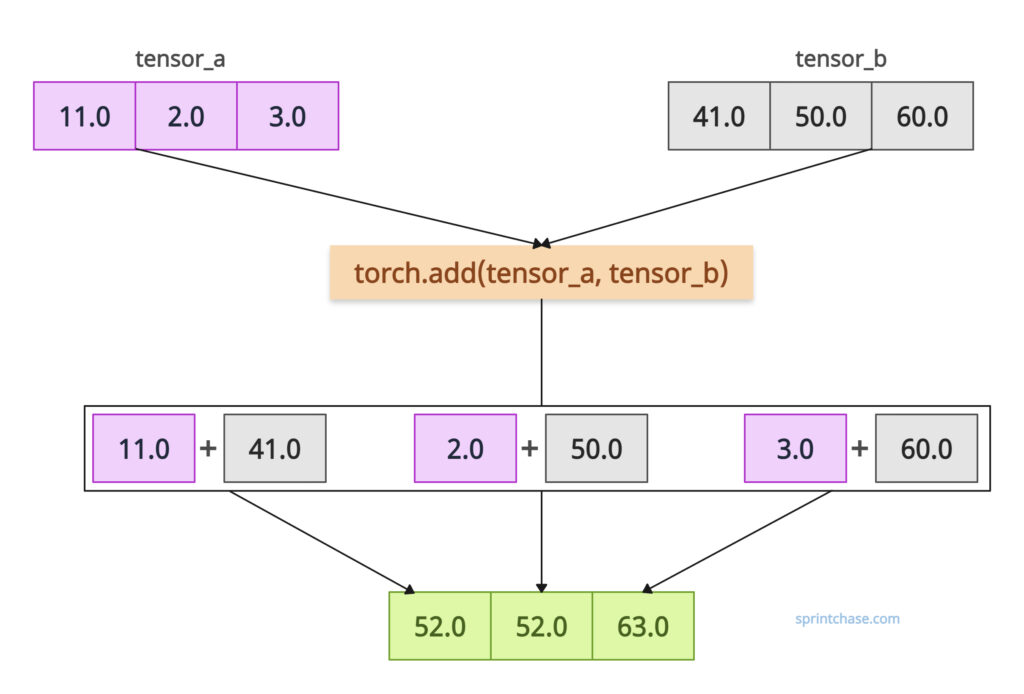

Element-wise addition

Let’s define two tensors with the same shape and dtype and perform element-wise addition.

```python
import torch

tensor_a = torch.tensor([11.0, 2.0, 3.0])
tensor_b = torch.tensor([41.0, 50.0, 60.0])
output_tensor = torch.add(tensor_a, tensor_b)
print(output_tensor)
# Output: tensor([52., 52., 63.])
```

The addition is performed position by position: the first element of tensor_a is added to the first element of tensor_b, so 11.0 + 41.0 = 52.0 becomes the first element of the output tensor.

In the same way, the second elements give 2.0 + 50.0 = 52.0, and the third elements give 3.0 + 60.0 = 63.0.
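The same result can be obtained with the + operator or the Tensor.add() method. The sketch below, reusing the tensors from the example above, checks that all three forms agree and prints each position-by-position sum.

```python
import torch

tensor_a = torch.tensor([11.0, 2.0, 3.0])
tensor_b = torch.tensor([41.0, 50.0, 60.0])

via_function = torch.add(tensor_a, tensor_b)
via_operator = tensor_a + tensor_b      # the + operator
via_method = tensor_a.add(tensor_b)     # the Tensor.add method

print(torch.equal(via_function, via_operator))  # True
print(torch.equal(via_function, via_method))    # True

# Element i of the result depends only on element i of each input
for i in range(len(tensor_a)):
    print(f"{tensor_a[i].item()} + {tensor_b[i].item()} = {via_function[i].item()}")
```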

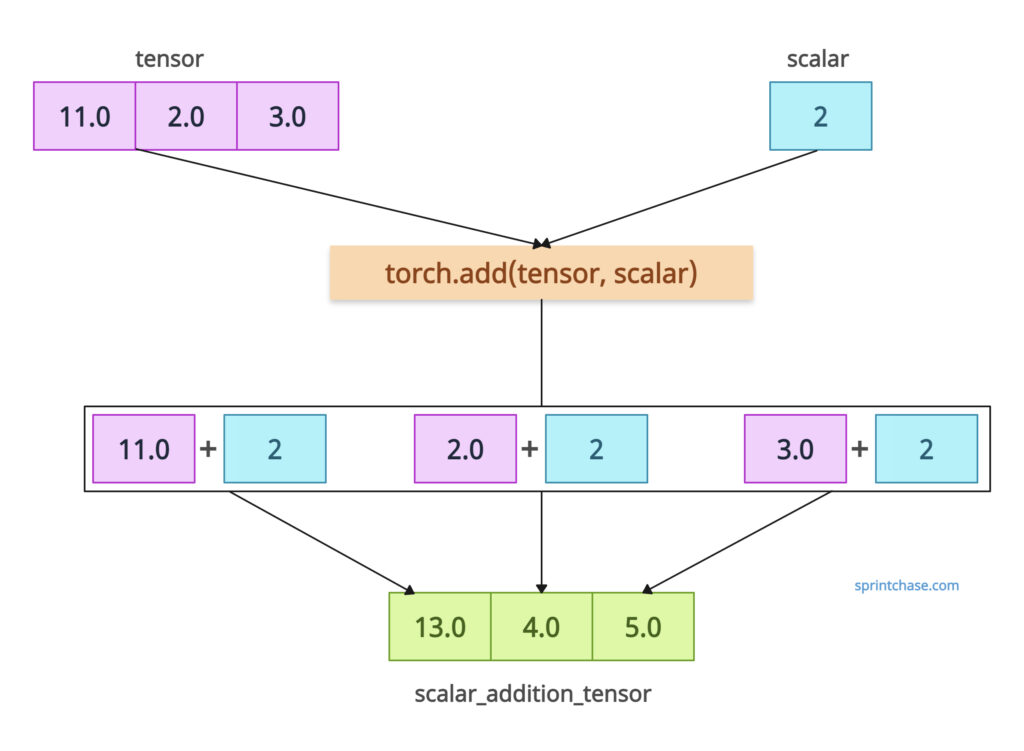

Scalar addition

When you add a single value (a scalar) to a tensor, PyTorch broadcasts the scalar to every element.

```python
import torch

tensor = torch.tensor([11.0, 2.0, 3.0])
scalar = 2
scalar_addition_tensor = torch.add(tensor, scalar)
print(scalar_addition_tensor)
# Output: tensor([13.,  4.,  5.])
```

In the above code, the scalar 2 is added to each element of tensor.
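Scalars also take part in type promotion, and alpha scales a scalar other just as it scales a tensor. A small sketch, assuming PyTorch’s default float dtype has not been changed from float32 (int_tensor and promoted are illustrative names):

```python
import torch

int_tensor = torch.tensor([1, 2, 3])         # dtype torch.int64

# int tensor + int scalar stays integer
print(torch.add(int_tensor, 2))              # tensor([3, 4, 5])

# int tensor + float scalar is promoted to the default float dtype
promoted = torch.add(int_tensor, 0.5)
print(promoted)                              # tensor([1.5000, 2.5000, 3.5000])
print(promoted.dtype)                        # torch.float32

# alpha also scales a scalar other: each element gets 3 * 2 added
print(torch.add(int_tensor, 2, alpha=3))     # tensor([7, 8, 9])
```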

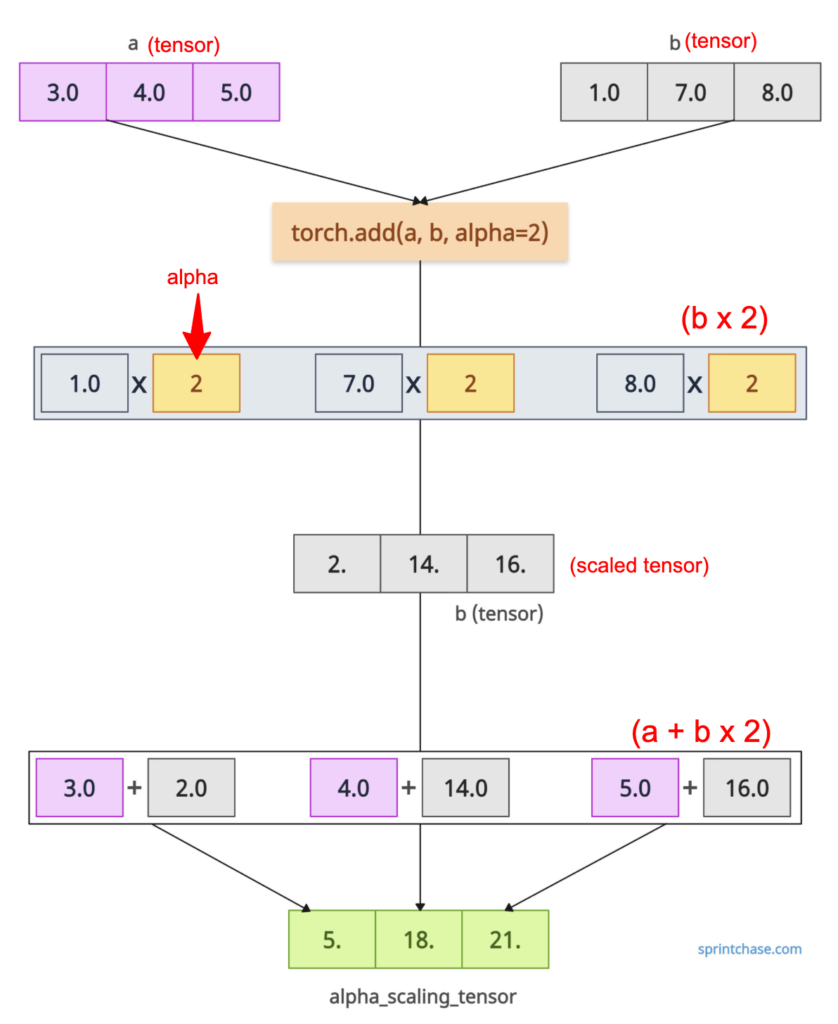

Alpha scaling

The alpha argument scales the second operand, other, before the addition.

```python
import torch

a = torch.tensor([3.0, 4.0, 5.0])
b = torch.tensor([1.0, 7.0, 8.0])
alpha_scaling_tensor = torch.add(a, b, alpha=2)  # computes a + 2 * b
print(alpha_scaling_tensor)
# Output: tensor([ 5., 18., 21.])
```

The code above computes a + 2 * b: each element of b is multiplied by alpha = 2 before it is added to a. For the first element, 1.0 × 2 = 2.0 and 3.0 + 2.0 = 5.0, so the first element of the output tensor is 5.0.

The second element follows the same logic: 7.0 × 2 = 14.0 and 4.0 + 14.0 = 18.0.

For the third element: 8.0 × 2 = 16.0, and 5.0 + 16.0 = 21.0.
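Because alpha can be any number, a negative value turns the operation into subtraction. The sketch below, reusing a and b from the example above, checks alpha=-1 against torch.sub() and confirms the formula by writing a + alpha * b out by hand.

```python
import torch

a = torch.tensor([3.0, 4.0, 5.0])
b = torch.tensor([1.0, 7.0, 8.0])

# alpha = -1 turns addition into subtraction: a - b
diff = torch.add(a, b, alpha=-1)
print(diff)                                   # tensor([ 2., -3., -3.])
print(torch.equal(diff, torch.sub(a, b)))     # True

# The formula out = a + alpha * b, written out by hand
alpha = 2
manual = a + alpha * b
print(torch.equal(manual, torch.add(a, b, alpha=alpha)))  # True
```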

Broadcasting

Tensors with different but compatible shapes can also be added with torch.add().

```python
import torch

a = torch.tensor([[3.0, 4.0], [11.0, 14.0]])
b = torch.tensor([1.0, 2.0])
broadcasting_addition = torch.add(a, b)
print(broadcasting_addition)
# Output:
# tensor([[ 4.,  6.],
#         [12., 16.]])
```

In this example, b (shape (2,)) is broadcast to a’s shape (2, 2), so [1.0, 2.0] is added to each row of a.
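Broadcasting can also stretch both operands at once. In this sketch (col, row, and grid are illustrative names), a (2, 1) column and a (3,) row combine into a (2, 3) result. torch.broadcast_shapes() previews the output shape, and shapes that cannot be broadcast raise a RuntimeError.

```python
import torch

col = torch.tensor([[1.0], [2.0]])        # shape (2, 1)
row = torch.tensor([10.0, 20.0, 30.0])    # shape (3,)

# Shapes are aligned from the right: (2, 1) and (3,) -> (2, 3)
print(torch.broadcast_shapes(col.shape, row.shape))  # torch.Size([2, 3])

grid = torch.add(col, row)
print(grid)
# tensor([[11., 21., 31.],
#         [12., 22., 32.]])

# Incompatible trailing dimensions (2 vs 3) raise an error
try:
    torch.add(torch.ones(2), torch.ones(3))
except RuntimeError as err:
    print("RuntimeError:", err)
```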

That’s it!